A basic task in thread synchronization is putting something in on one thread and taking it out on another thread for further processing. Two or more threads of execution access certain data, and to keep the data consistent, access is split into atomic operations that only one thread at a time is allowed to perform. Until one thread completes its operation, other threads are not allowed to touch the data and have to wait, entering a so-called wait state. This is what synchronization objects, and critical sections in particular, are for. Furthermore, a thread that is waiting for data to become available has nothing to do, so it uses one of the wait functions to avoid wasting CPU time. The threads use events or similar objects to send notifications and to be woken from the wait state when a notification arrives.
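Here is a minimal sketch of this hand-off in portable C++. It assumes standard library primitives in place of the Win32 ones: `std::mutex` plays the role of the critical section and `std::condition_variable` the role of the event. `HandoffQueue` and `TransferAndSum` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Hand-off queue: the mutex protects the data, the condition variable
// wakes the receiving thread when something has been put in.
class HandoffQueue {
public:
    void Put(int value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            items_.push(value);
        }                        // lock released here, before notifying
        available_.notify_one();
    }

    // Blocks without burning CPU until an item is available, then takes it.
    int Take() {
        std::unique_lock<std::mutex> lock(mutex_);
        available_.wait(lock, [this] { return !items_.empty(); });
        int value = items_.front();
        items_.pop();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable available_;
    std::queue<int> items_;
};

// Puts 1..count on the calling thread and sums them on a receiver thread.
long long TransferAndSum(int count) {
    HandoffQueue queue;
    long long sum = 0;           // written by the receiver, read after join()
    std::thread receiver([&] {
        for (int i = 0; i < count; ++i) sum += queue.Take();
    });
    for (int i = 1; i <= count; ++i) queue.Put(i);
    receiver.join();
    return sum;
}
```

For example, `TransferAndSum(1000)` moves the numbers 1 to 1000 to the receiver thread and returns 500500. While the queue is empty, the receiver sleeps inside `wait` rather than polling.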

Let us see what the cost of doing things not quite right is. Take a send thread that generates data/events. It locks a shared resource and sets an event when something is done that requires a receive thread to wake up and take over. The send thread might be doing something like:

```cpp
CComCritSecLock<CComAutoCriticalSection> DataLock(m_CriticalSection);
m_nSendCount++;
ATLVERIFY(m_AvailabilityEvent.Set());
```

And the receive thread waits and takes over like this:

```cpp
CComCritSecLock<CComAutoCriticalSection> DataLock(m_CriticalSection);
m_nReceiveCount++;
ATLVERIFY(m_AvailabilityEvent.Reset());
```
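The two snippets above rely on class members that are not shown. The sketch below is one hypothetical way to model them portably. `std::mutex` stands in for `m_CriticalSection`, and a small `ManualResetEvent` class built on a condition variable stands in for `m_AvailabilityEvent`. The wait inside `ReceiveStep` is an assumption, because the receive snippet only shows what happens after the wait returns. The real test uses Win32 objects, not these.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical portable stand-in for a manual-reset event:
// Set() signals and wakes all waiters, Reset() clears the signal.
class ManualResetEvent {
public:
    void Set() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            signaled_ = true;
        }
        cv_.notify_all();
    }
    void Reset() {
        std::lock_guard<std::mutex> lock(mutex_);
        signaled_ = false;
    }
    // Returns true if the event is signaled within `timeout`.
    bool Wait(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mutex_);
        return cv_.wait_for(lock, timeout, [this] { return signaled_; });
    }
private:
    std::mutex mutex_;
    std::condition_variable cv_;
    bool signaled_ = false;
};

// Shared state mirroring the article's members (illustrative names).
struct SharedState {
    std::mutex criticalSection;          // stands in for m_CriticalSection
    ManualResetEvent availabilityEvent;  // stands in for m_AvailabilityEvent
    long long sendCount = 0;             // m_nSendCount
    long long receiveCount = 0;          // m_nReceiveCount
};

// Send step as in the first snippet: the event is set while the lock is held.
void SendStep(SharedState& s) {
    std::lock_guard<std::mutex> dataLock(s.criticalSection);
    ++s.sendCount;
    s.availabilityEvent.Set();
}

// Receive step: wait for the event (assumed), then lock, count, reset.
bool ReceiveStep(SharedState& s, std::chrono::milliseconds timeout) {
    if (!s.availabilityEvent.Wait(timeout)) return false;
    std::lock_guard<std::mutex> dataLock(s.criticalSection);
    ++s.receiveCount;
    s.availabilityEvent.Reset();
    return true;
}
```

Calling `SendStep` once and then `ReceiveStep` once leaves both counters at 1 and the event reset. A further `ReceiveStep` with a zero timeout therefore returns `false`.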

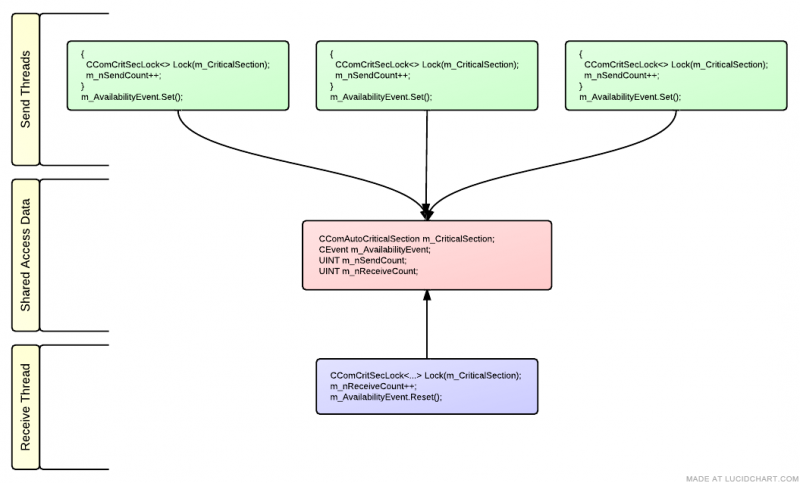

Let us have three send threads and one receive thread running in parallel.

The simplicity is tempting, and after running this for 60 seconds the result is:

```
Send Count:          280,577,239
Receive Count:           703,238
Process Time:        User 14,539 ms, Kernel 60,746 ms
Send Thread 0 Time:  User  5,584 ms, Kernel 22,666 ms, Context Switches 5,449,926
Receive Thread Time: User    811 ms, Kernel  2,776 ms, Context Switches 8,836,263
```

One of the send threads performed 280M iterations, while the receive thread could only do 703K. Note that the send figure is for one of the three send threads, and that the test code involves random delays, so the numbers vary from run to run. The huge difference is clearly related to the behavior around the critical section and thread wait time.

All four threads lock the critical section and make the other threads wait. However, the send threads only set the event, while the receive thread waits for the event and wakes up when (that is, soon after) it is set. Once the receive thread wakes up, it tries to enter the critical section and acquire the lock. The send threads, however, set the event before leaving the critical section. If things happen fast enough, the receive thread therefore wakes up only to find the critical section still locked. It fails to enter and eventually gives its time slice away, which costs a context switch.

This explains the low number of receive thread iterations together with the rather high number of context switches.
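To observe this effect outside of ATL, the following sketch runs several send threads and one receive thread for a fixed duration. A switch selects whether the "event" is set inside or outside the data lock.

It is an assumption-laden model:

- `std::mutex` is not a `CRITICAL_SECTION`, and the condition-variable-based event is not a kernel event.
- `sendCount` is the total over all send threads, whereas the article reports a single thread.
- `busyOnWake` is a hypothetical diagnostic that counts how often the receiver woke up only to find the lock held.

Absolute numbers will differ from the article's.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

struct RunResult {
    long long sendCount = 0;     // total over all send threads
    long long receiveCount = 0;
    long long busyOnWake = 0;    // receiver woke up but the data lock was held
};

// Runs `senders` send threads and one receive thread for `duration`.
// signalInsideLock == true: set the event while holding the data lock;
// false: set it after releasing the data lock.
RunResult RunSendReceive(int senders, std::chrono::milliseconds duration,
                         bool signalInsideLock) {
    std::mutex dataLock;               // stands in for the critical section
    std::mutex eventMutex;             // eventMutex + eventCv + signaled
    std::condition_variable eventCv;   // model a manual-reset event
    bool signaled = false;
    std::atomic<bool> stop{false};
    RunResult result;

    auto setEvent = [&] {
        { std::lock_guard<std::mutex> g(eventMutex); signaled = true; }
        eventCv.notify_all();
    };

    std::vector<std::thread> threads;
    for (int i = 0; i < senders; ++i) {
        threads.emplace_back([&] {
            while (!stop.load()) {
                if (signalInsideLock) {
                    std::lock_guard<std::mutex> g(dataLock);
                    ++result.sendCount;
                    setEvent();            // receiver may wake into a held lock
                } else {
                    { std::lock_guard<std::mutex> g(dataLock); ++result.sendCount; }
                    setEvent();            // lock already released
                }
            }
        });
    }
    threads.emplace_back([&] {             // the receive thread
        while (!stop.load()) {
            {
                std::unique_lock<std::mutex> ev(eventMutex);
                if (!eventCv.wait_for(ev, std::chrono::milliseconds(10),
                                      [&] { return signaled; }))
                    continue;              // timed out, re-check stop
            }
            std::unique_lock<std::mutex> g(dataLock, std::try_to_lock);
            if (!g.owns_lock()) { ++result.busyOnWake; g.lock(); }
            ++result.receiveCount;
            std::lock_guard<std::mutex> ev(eventMutex);
            signaled = false;              // Reset()
        }
    });

    std::this_thread::sleep_for(duration);
    stop = true;
    for (auto& t : threads) t.join();
    return result;
}
```

A run such as `RunSendReceive(3, std::chrono::milliseconds(1000), true)` can be compared against the same call with `false`. Comparing `busyOnWake` with `receiveCount` gives a rough idea of how often the receiver hit a held lock right after waking.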

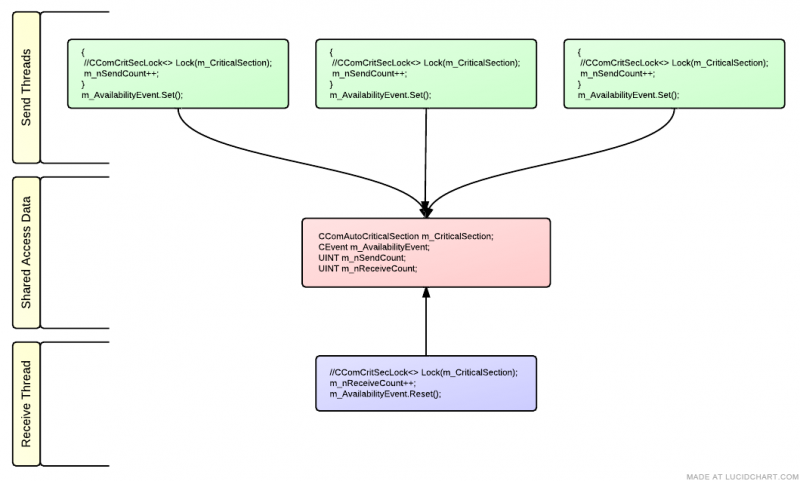

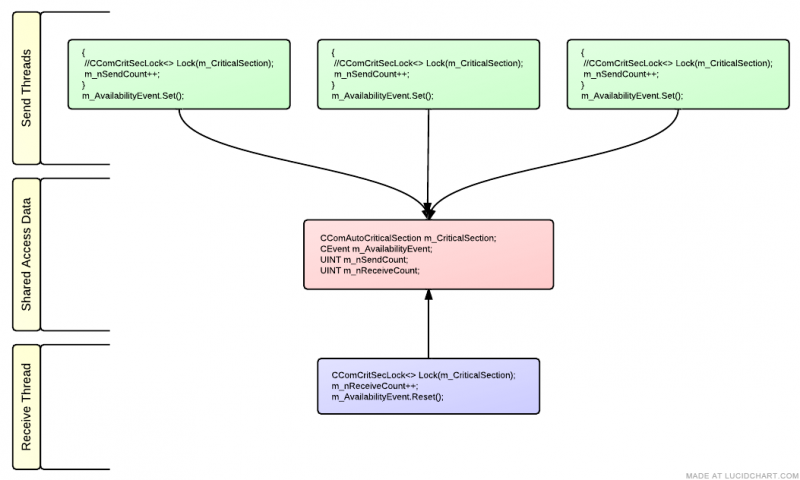

To address this problem, let us modify the sending part to set the event outside the scope of the critical section lock.

```cpp
{
    CComCritSecLock<CComAutoCriticalSection> DataLock(m_CriticalSection);
    m_nSendCount++;
}
ATLVERIFY(m_AvailabilityEvent.Set());
```

This makes sense too: the event itself does not need to be signaled from inside the protected fragment. The sending thread may do it a bit later. There is a small but acceptable chance that another send thread sets the event after the original thread leaves the critical section but before it sets the event itself. From the receive thread's point of view, this means it sometimes enters the protected area with the results of work from two or more threads. At other times the event is set again after all the work is done, and there is nothing more to do.

The result of execution is:

```
Send Count:          233,620,244
Receive Count:        10,901,974
Process Time:        User 35,006 ms, Kernel 90,808 ms
Send Thread 0 Time:  User 10,654 ms, Kernel 27,190 ms, Context Switches 7,879,948
Receive Thread Time: User  3,338 ms, Kernel 12,012 ms, Context Switches 8,021,273
```

Apparently, the change had a positive effect on the receive thread, which was able to make about 15 times more iterations.
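The interleaving described above, where the receive thread sometimes gets the work of two send threads at once and sometimes wakes up to nothing, can be replayed deterministically on a single thread. In this hypothetical model the shared data is a `pending` counter. Because only one thread runs, the event is reduced to a plain `bool`; a real multi-threaded implementation needs a thread-safe event.

```cpp
#include <cassert>
#include <mutex>
#include <vector>

struct Shared {
    std::mutex criticalSection;   // protects `pending`
    int pending = 0;              // items published but not yet consumed
    bool eventSignaled = false;   // single-threaded stand-in for the event
};

// Sender, part 1: update shared data inside the lock.
void Publish(Shared& s) {
    std::lock_guard<std::mutex> lock(s.criticalSection);
    ++s.pending;
}   // lock released here, before the event is set

// Sender, part 2: set the event after leaving the lock.
void Signal(Shared& s) { s.eventSignaled = true; }

// Receiver: reset the event and take whatever is pending (may be 0 or 2+).
int ConsumeAll(Shared& s) {
    std::lock_guard<std::mutex> lock(s.criticalSection);
    s.eventSignaled = false;
    int taken = s.pending;
    s.pending = 0;
    return taken;
}

// Replays one possible ordering of two senders and the receiver.
std::vector<int> ReplayInterleaving() {
    Shared s;
    std::vector<int> batches;
    Publish(s);   // sender A updates data and leaves the lock
    Publish(s);   // sender B does the same before A sets the event
    Signal(s);    // sender A sets the event
    if (s.eventSignaled) batches.push_back(ConsumeAll(s));  // takes 2 items
    Signal(s);    // sender B sets the event late
    if (s.eventSignaled) batches.push_back(ConsumeAll(s));  // takes 0 items
    return batches;
}
```

The receiver must treat both outcomes as normal. A batch of two is the work of both senders, and a batch of zero is a late wake-up with nothing left to do.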

After all, how does this compare to a scenario where no critical section is involved at all, that is, where synchronization is implemented using an alternative technique such as interlocked variable access?

With the critical section removed, the result of execution is:

```
Send Count:          246,133,642
Receive Count:        24,743,638
Process Time:        User 16,723 ms, Kernel 225,515 ms
Send Thread 0 Time:  User  4,461 ms, Kernel  56,519 ms, Context Switches  1,353
Receive Thread Time: User  3,354 ms, Kernel  56,019 ms, Context Switches 17,372
```

The send threads made about the same number of iterations, and the receive thread made more than twice as many. At the same time, the number of context switches dropped dramatically, which indicates that this way the threads do not have to fall into a wait state and wait for one another. The threads still wait for the stop event (with a zero timeout, that is, they just check it without actually waiting), and the receive thread keeps synchronizing on the availability event.
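Here is a minimal sketch of the interlocked idea, using `std::atomic` as a portable analogue of `InterlockedIncrement` and `InterlockedExchange`. Senders publish work by atomically incrementing a counter, and the receiver claims everything published so far with one atomic exchange. Unlike the article's test, this sketch does not use an availability event at all. The idle receiver simply yields, an assumption made to keep the example short, which means it keeps using CPU while there is nothing to do.

```cpp
#include <atomic>
#include <cassert>
#include <thread>
#include <vector>

// Returns the number of items the receiver claimed; all published items
// are claimed once every sender has finished.
long long InterlockedHandoff(int senders, int itemsPerSender) {
    std::atomic<long long> pending{0};         // published, not yet claimed
    std::atomic<int> sendersRunning{senders};
    long long consumed = 0;                    // receiver thread only

    std::thread receiver([&] {
        for (;;) {
            // Check completion *before* draining: if all senders are done,
            // their increments are visible and this exchange gets the rest.
            const bool done = sendersRunning.load() == 0;
            const long long batch = pending.exchange(0);   // claim everything
            consumed += batch;
            if (done) break;
            if (batch == 0) std::this_thread::yield();     // nothing to do yet
        }
    });

    std::vector<std::thread> threads;
    for (int i = 0; i < senders; ++i) {
        threads.emplace_back([&] {
            for (int n = 0; n < itemsPerSender; ++n) pending.fetch_add(1);
            sendersRunning.fetch_sub(1);
        });
    }
    for (auto& t : threads) t.join();
    receiver.join();
    return consumed;
}
```

No thread ever blocks on a lock here, so there is nothing for a sender to be holding when the receiver wakes up.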

Having done that, let us run one more test to check the effect of entering and leaving a critical section that is never owned by another thread. Suppose the send threads keep running without locking the critical section, and the receive thread goes back to entering and leaving it on its own, without contention from other threads. How much slower will the overall execution be?

The results show that without concurrent access to the critical section, no extra context switches take place, and the execution is similar to the previous (third) test:

```
Send Count:          213,333,676
Receive Count:        18,542,098
Process Time:        User 15,022 ms, Kernel 227,496 ms
Send Thread 0 Time:  User  3,322 ms, Kernel  57,626 ms, Context Switches  2,786
Receive Thread Time: User  3,510 ms, Kernel  56,316 ms, Context Switches 10,616
```
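The uncontended case can be approximated with a small micro-benchmark. One thread repeatedly locks and unlocks a `std::mutex` that no other thread ever touches, and then the same loop runs again without the lock. This sketch assumes that an uncontended `std::mutex` behaves similarly to an uncontended critical section; both typically avoid entering the kernel when there is no contention. The measured numbers depend heavily on compiler, platform and optimization level. `MeasureUncontendedLock` is a hypothetical name.

```cpp
#include <cassert>
#include <chrono>
#include <mutex>

struct LockCost {
    long long totalIncrements;     // both loops together
    double nsPerLockedIteration;   // lock + increment + unlock
    double nsPerPlainIteration;    // increment only
};

LockCost MeasureUncontendedLock(long long iterations) {
    assert(iterations > 0);
    using Clock = std::chrono::steady_clock;
    std::mutex m;                    // only ever locked by this thread
    volatile long long counter = 0;  // volatile keeps the loops from being optimized away

    const auto t0 = Clock::now();
    for (long long i = 0; i < iterations; ++i) {
        std::lock_guard<std::mutex> guard(m);   // never contended
        counter = counter + 1;
    }
    const auto t1 = Clock::now();
    for (long long i = 0; i < iterations; ++i) {
        counter = counter + 1;
    }
    const auto t2 = Clock::now();

    auto nsPer = [iterations](Clock::duration d) {
        return std::chrono::duration<double, std::nano>(d).count() / iterations;
    };
    return {counter, nsPer(t1 - t0), nsPer(t2 - t1)};
}
```

The difference between the two per-iteration figures is a rough estimate of the cost of an uncontended lock/unlock pair on the machine at hand.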

Some conclusions:

- setting an event for a shared resource while still holding its lock might be pretty expensive

- critical sections are quite lightweight, as promised, and add minimal overhead unless contention takes place

- critical sections might still create a bottleneck under intensive use from multiple threads, in which case alternatives such as slim reader/writer locks and interlocked variable access are worth considering

- the number of a thread’s context switches is a good indicator of execution contention
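As for the slim reader/writer alternative mentioned above, the sketch below uses C++17 `std::shared_mutex` as a portable analogue of a Win32 `SRWLOCK`. Readers take the lock in shared mode and can proceed in parallel, while writers take it exclusively. This helps when reads dominate. In a write-heavy pattern like the counters in this test, every access would still be exclusive. `ReadMostlyCounter` and `RunReadersAndWriter` are hypothetical names.

```cpp
#include <cassert>
#include <mutex>
#include <shared_mutex>
#include <thread>
#include <vector>

// A value read often and written rarely, guarded by a reader/writer lock.
class ReadMostlyCounter {
public:
    void Add(long long delta) {
        std::unique_lock<std::shared_mutex> lock(mutex_);   // exclusive
        value_ += delta;
    }
    long long Get() const {
        std::shared_lock<std::shared_mutex> lock(mutex_);   // shared
        return value_;
    }
private:
    mutable std::shared_mutex mutex_;
    long long value_ = 0;
};

// `readers` threads only read; one writer adds 1 `writes` times.
long long RunReadersAndWriter(int readers, int writes) {
    ReadMostlyCounter counter;
    std::vector<std::thread> threads;
    for (int r = 0; r < readers; ++r) {
        threads.emplace_back([&] {
            long long last = 0;
            for (int i = 0; i < writes; ++i) {
                long long now = counter.Get();
                assert(now >= last);   // the value never goes backwards
                last = now;
            }
        });
    }
    threads.emplace_back([&] {
        for (int i = 0; i < writes; ++i) counter.Add(1);
    });
    for (auto& t : threads) t.join();
    return counter.Get();
}
```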

Good luck with further research on synchronization and performance. Here is the source code for the test, a Visual Studio 2010 C++ project.

Obtaining the number of context switches for x64 code deserves a separate post; the code is here, lines 18-144 within #pragma region.