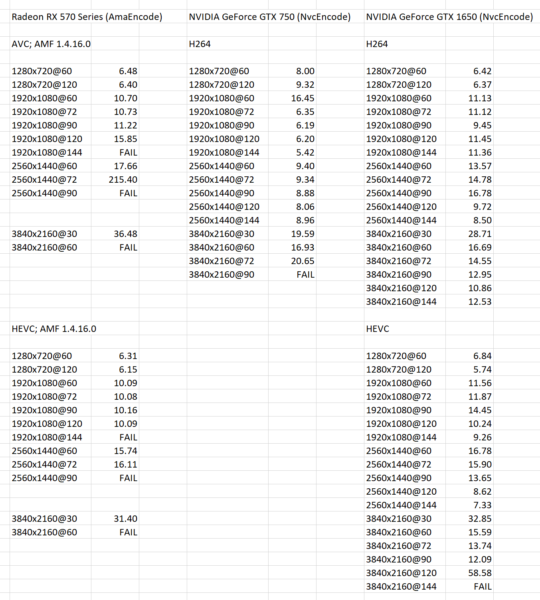

This continues the previous posts on AMD AMF encoder latency at faster settings, now with a side-by-side comparison to NVIDIA encoders.

The numbers show how different the encoders are even though they do similar work. The NVIDIA cards are not high end: the GTX 1650 is literally the cheapest card in the Turing 16xx series, and the GeForce 700 series was released in 2013 (OK, the GTX 750 was midrange at the time).

The numbers are milliseconds of encoding latency per video frame.

Back in 2013, an NVIDIA card was already capable of real-time NVENC hardware encoding of 4K video at 60 frames per second, and four years later the RX 570 was released with a significantly less powerful encoder.
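To put per-frame latency in the context of a 60 frames per second stream, here is a minimal sketch of the frame-time budget arithmetic. The function names are hypothetical and nothing here comes from the measurement application. It also assumes the encoder handles one frame at a time: a pipelined encoder can sustain real time even when the latency of a single frame exceeds the frame interval.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at the given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_real_time(latency_ms: float, fps: float) -> bool:
    """True if a non-pipelined encoder with this per-frame latency keeps up.

    Assumption: frames are encoded strictly one after another, so the
    per-frame latency must not exceed the frame interval.
    """
    return latency_ms <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Print the per-frame budget for a few common frame rates.
    for fps in (30, 60, 120):
        print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```

At 60 frames per second the budget is roughly 16.7 ms per frame, so a latency figure below that fits a real-time 60 fps stream under the one-frame-at-a-time assumption.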

The encoder of the GTX 1650 is much slower than that of the GTX 1080 Ti (application and data to come later), but it is still powerful enough to cover a wide range of video streams, including ones that are quite demanding in computation and bandwidth.

The GTX 750 vs. GTX 1650 comparison also shows that even though the two belong to very different microarchitectures (first-generation Maxwell and Turing respectively, with second-generation Maxwell and Pascal between them), a much newer card is not necessarily superior to the older one in every way. When it comes to real-time performance, vendors design hardware to be just good enough.