We now have the DMO filter project compiling and registered with the system, so it is the right time to start adding code that allows connecting the filter to other DirectShow filters, such as a video capture or video file source on the input and a video renderer on the output.

The IMediaObject implementation includes the following groups of methods:

- object capabilities:

```cpp
STDMETHOD(GetStreamCount)(DWORD* pnInputStreamCount, DWORD* pnOutputStreamCount);
STDMETHOD(GetInputStreamInfo)(DWORD nInputStreamIndex, DWORD* pnFlags);
STDMETHOD(GetOutputStreamInfo)(DWORD nOutputStreamIndex, DWORD* pnFlags);
STDMETHOD(GetInputType)(DWORD nInputStreamIndex, DWORD nTypeIndex, DMO_MEDIA_TYPE* pMediaType);
STDMETHOD(GetOutputType)(DWORD nOutputStreamIndex, DWORD nTypeIndex, DMO_MEDIA_TYPE* pMediaType);
```

- current media types:

```cpp
STDMETHOD(SetInputType)(DWORD nInputStreamIndex, const DMO_MEDIA_TYPE* pMediaType, DWORD nFlags);
STDMETHOD(SetOutputType)(DWORD nOutputStreamIndex, const DMO_MEDIA_TYPE* pMediaType, DWORD nFlags);
STDMETHOD(GetInputCurrentType)(DWORD nInputStreamIndex, DMO_MEDIA_TYPE* pMediaType);
STDMETHOD(GetOutputCurrentType)(DWORD nOutputStreamIndex, DMO_MEDIA_TYPE* pMediaType);
STDMETHOD(GetInputSizeInfo)(DWORD nInputStreamIndex, DWORD* pnBufferSize, DWORD* pnMaximalLookAheadBufferSize, DWORD* pnAlignment);
STDMETHOD(GetOutputSizeInfo)(DWORD nOutputStreamIndex, DWORD* pnBufferSize, DWORD* pnAlignment);
```

- streaming:

```cpp
STDMETHOD(GetInputMaxLatency)(DWORD nInputStreamIndex, REFERENCE_TIME* pnMaximalLatency);
STDMETHOD(SetInputMaxLatency)(DWORD nInputStreamIndex, REFERENCE_TIME nMaximalLatency);
STDMETHOD(Flush)();
STDMETHOD(Discontinuity)(DWORD nInputStreamIndex);
STDMETHOD(AllocateStreamingResources)();
STDMETHOD(FreeStreamingResources)();
```

- data processing:

```cpp
STDMETHOD(GetInputStatus)(DWORD nInputStreamIndex, DWORD* pnFlags);
STDMETHOD(Lock)(LONG bLock);
STDMETHOD(ProcessInput)(DWORD nInputStreamIndex, IMediaBuffer* pMediaBuffer, DWORD nFlags, REFERENCE_TIME nTime, REFERENCE_TIME nLength);
STDMETHOD(ProcessOutput)(DWORD nFlags, DWORD nOutputBufferCount, DMO_OUTPUT_DATA_BUFFER* pOutputBuffers, DWORD* pnStatus);
```

For a very basic filter/DMO we need to implement:

- GetStreamCount, to indicate the number of streams and hence the number of pins on the corresponding filter

- GetInputStreamInfo and GetInputType, to indicate the acceptable input media type

- GetOutputStreamInfo and GetOutputType, to indicate the acceptable output media type; we can suggest an output media type only once the input media type has been agreed, since the two media types must match

- SetInputType, SetOutputType, GetInputCurrentType and GetOutputCurrentType, which are simple get/set accessors with a check applied when a new current media type is set

- GetInputSizeInfo and GetOutputSizeInfo, to indicate buffer requirements

- GetInputStatus and ProcessInput, to accept input data

- ProcessOutput, to actually perform the brightness and contrast correction
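A minimal, portable sketch of the stream count and type negotiation described in the list above, for one input and one output stream. It deliberately avoids the real DMO headers: SimpleMediaType, TypeResult and SampleObject are hypothetical stand-ins for DMO_MEDIA_TYPE, the HRESULT codes (S_OK, DMO_E_NO_MORE_ITEMS, DMO_E_TYPE_NOT_SET) and the actual object class. What it illustrates is that the output side offers nothing until the input type has been agreed, and then offers a copy of it.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// Hypothetical stand-ins: the real code returns HRESULTs such as S_OK,
// DMO_E_NO_MORE_ITEMS and DMO_E_TYPE_NOT_SET and fills a DMO_MEDIA_TYPE.
enum class TypeResult { Ok, NoMoreItems, TypeNotSet };

struct SimpleMediaType {
    uint32_t subtype;  // FOURCC of the pixel format
    int32_t width;     // 0 means "not specified" (partial type)
    int32_t height;
};

// FOURCC for YUY2, packed the same way as MAKEFOURCC('Y','U','Y','2').
constexpr uint32_t kFourccYuy2 = 'Y' | ('U' << 8) | ('Y' << 16) | ('2' << 24);

struct SampleObject {
    std::optional<SimpleMediaType> input;  // agreed input type, if any

    // One input stream and one output stream, hence one pin on each side.
    void GetStreamCount(uint32_t* inputs, uint32_t* outputs) const {
        assert(inputs && outputs);
        *inputs = 1;
        *outputs = 1;
    }

    // Input side: offer a single partial type (YUY2, dimensions unspecified).
    TypeResult GetInputType(uint32_t typeIndex, SimpleMediaType* type) const {
        if (typeIndex > 0) return TypeResult::NoMoreItems;
        *type = SimpleMediaType{kFourccYuy2, 0, 0};
        return TypeResult::Ok;
    }

    // Output side: nothing to offer until the input type is agreed, then
    // exactly the input type, since input and output types must match.
    TypeResult GetOutputType(uint32_t typeIndex, SimpleMediaType* type) const {
        if (typeIndex > 0) return TypeResult::NoMoreItems;
        if (!input) return TypeResult::TypeNotSet;
        *type = *input;
        return TypeResult::Ok;
    }
};
```

In the real object the enumeration methods also validate the stream index (returning DMO_E_INVALIDSTREAMINDEX) and accept a NULL media type pointer as a plain query; the sketch leaves both out.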

The object will have one input pin and one output pin, with fixed-size buffers of the same size on both ends: the simplest case possible.

Starting with the GetInputType method, we deal with the DMO_MEDIA_TYPE structure, which describes the format of the data. It is a member-for-member twin of the DirectShow AM_MEDIA_TYPE structure and is managed through the DMO API functions Mo*MediaType (MoInitMediaType, MoFreeMediaType, MoCopyMediaType and others). For easy and reliable manipulation of this type we need an ATL/WTL-like wrapper class, “template < BOOL t_bManaged > class CDmoMediaTypeT”, which takes care of allocating and freeing the media types.
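The ownership idea behind such a wrapper can be sketched portably. This is a hypothetical illustration, not the author's CDmoMediaTypeT: MediaTypeStandIn models only the format block of DMO_MEDIA_TYPE, std::calloc/std::free stand in for the Mo*MediaType functions, and the meaning assumed for the template flag is one plausible reading: when `Managed` is true the wrapper owns the format block and releases it on destruction (the role MoFreeMediaType plays for a real DMO_MEDIA_TYPE); when false it only views a structure owned elsewhere.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Simplified stand-in for the format-block part of DMO_MEDIA_TYPE; the real
// structure also carries majortype, subtype, formattype, pUnk and more.
struct MediaTypeStandIn {
    uint32_t cbFormat = 0;
    uint8_t* pbFormat = nullptr;
};

// Hypothetical wrapper: Managed == true means "free the format block in the
// destructor"; Managed == false means "just look, never free".
template <bool Managed>
class MediaTypeWrapperT {
public:
    explicit MediaTypeWrapperT(MediaTypeStandIn* type) : m_type(type) { assert(type); }
    MediaTypeWrapperT(const MediaTypeWrapperT&) = delete;
    MediaTypeWrapperT& operator=(const MediaTypeWrapperT&) = delete;
    ~MediaTypeWrapperT() {
        if constexpr (Managed) Free();
    }

    // Replace the format block with a zero-filled block of the given size.
    bool AllocateFormat(uint32_t size) {
        Free();
        m_type->pbFormat = static_cast<uint8_t*>(std::calloc(size, 1));
        if (!m_type->pbFormat) return false;
        m_type->cbFormat = size;
        return true;
    }

    // Release the format block and reset the size (free(nullptr) is a no-op).
    void Free() {
        std::free(m_type->pbFormat);
        m_type->pbFormat = nullptr;
        m_type->cbFormat = 0;
    }

    MediaTypeStandIn* operator->() const { return m_type; }

private:
    MediaTypeStandIn* m_type;
};
```

Making the wrapper non-copyable keeps a managed instance from freeing the same block twice; a fuller version would add explicit copy helpers built on MoCopyMediaType instead.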

The m_pInputMediaType and m_pOutputMediaType variables hold the media types accepted and agreed for the object's streams (and thus for the filter pins). m_DataCriticalSection is a critical section that ensures thread-safe operation.

The ProcessInput method receives input buffers for further processing; however, it is not expected to write any output. The filter/DMO is expected to pre-process the input and save the pre-processing results in member variables or private buffers. Alternatively, the filter can keep a reference to the input buffer and perform the entire processing in the subsequent ProcessOutput call.

The other methods, SetInputType, SetOutputType, Flush, Discontinuity and GetInputStatus, also depend on the current input status, so to make life easier we introduce a CInput class that holds all input data provided with a ProcessInput call until it is needed by ProcessOutput and the other implementation methods. The m_Input member variable holds the latest input buffer.
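The role of such an input holder can be sketched without COM. In this hypothetical model, InputHolder plays the part of CInput, a `std::shared_ptr` to a byte vector stands in for the IMediaBuffer reference (the real class would hold something like a CComPtr<IMediaBuffer> plus timing data), and `std::mutex` stands in for m_DataCriticalSection. The method names are illustrative only; each is mapped in a comment to the DMO method it would serve.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

// Stand-in for a reference to an IMediaBuffer.
using BufferRef = std::shared_ptr<const std::vector<uint8_t>>;

// Hypothetical CInput-like holder: keeps the latest buffer received by
// ProcessInput until ProcessOutput (or Flush) releases it.
class InputHolder {
public:
    // GetInputStatus: accept data only while nothing is pending.
    bool CanAcceptData() const {
        std::lock_guard<std::mutex> lock(m_mutex);
        return !m_buffer;
    }

    // ProcessInput: keep a reference; refuse while a buffer is pending
    // (a real DMO returns DMO_E_NOTACCEPTING in that case).
    bool Put(BufferRef buffer) {
        assert(buffer);
        std::lock_guard<std::mutex> lock(m_mutex);
        if (m_buffer) return false;
        m_buffer = std::move(buffer);
        return true;
    }

    // ProcessOutput: hand over the pending buffer and stop holding it.
    BufferRef Take() {
        std::lock_guard<std::mutex> lock(m_mutex);
        return std::exchange(m_buffer, nullptr);
    }

    // Flush, Discontinuity handling or a media type change: drop pending input.
    void Reset() {
        std::lock_guard<std::mutex> lock(m_mutex);
        m_buffer.reset();
    }

private:
    mutable std::mutex m_mutex;  // plays the role of m_DataCriticalSection
    BufferRef m_buffer;          // the "latest input buffer"
};
```

Keeping a reference rather than copying the data corresponds to the second option above: all real work is deferred to ProcessOutput.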

ProcessOutput is the last method to implement, and it contains the actual data processing. It is called when both streams/pins are connected, the media types are agreed and the graph is not stopped. At the moment of the call, input data should already be available through the IMediaBuffer reference held by m_Input, received earlier in a ProcessInput call. ProcessOutput fills the output buffer with the processed data.

We initially chose the YUY2 pixel format for input and output data. A YUV format was chosen because brightness and contrast are easy to process in the YUV color space: the correction affects only the Y (luma) component of each pixel.

The following equation summarizes the correction, where C is the contrast value and B is the brightness value:

$$Y' = ((Y - 16) \times C) + B + 16$$
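A quick worked instance of the equation, using a contrast factor C = 0.5 and brightness B = -16, which correspond to the constants chosen below (0x2000 relative to a neutral 0x4000, and -0x0010):

$$
\begin{aligned}
Y = 16:&\quad Y' = ((16 - 16) \times 0.5) + (-16) + 16 = 0\\
Y = 116:&\quad Y' = ((116 - 16) \times 0.5) + (-16) + 16 = 50\\
Y = 235:&\quad Y' = ((235 - 16) \times 0.5) + (-16) + 16 = 109.5
\end{aligned}
$$

So the nominal luma range 16..235 is compressed to 0..109.5 before any integer rounding or clamping, i.e. the picture becomes both darker and flatter.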

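In the YUY2 format, pixels are grouped into macropixels: each 4-byte macropixel describes two horizontally neighboring pixels and is stored as Y0, U0, Y1, V0, so the two pixels share a single pair of U and V chroma samples.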

That is, input and output buffers are arrays of macropixels in which we need to update the Y components using the brightness and contrast correction coefficients. For now, we define constant member variables m_nBrightness and m_nContrast and initialize them to the predefined values -0x0010 and 0x2000 respectively. The brightness value ranges from -0x00FF (darkest) through 0x00FF (brightest), with 0x0000 leaving the original brightness intact. The contrast value ranges from 0x0000 (fully faded out) through 0xFFFF, with 0x4000 leaving the original contrast intact. The chosen constants therefore slightly decrease brightness and reduce contrast by 50%. The actual correction of a single Y value is performed by the Adjust function.
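A fixed-point sketch of what an Adjust function could look like under the value ranges just described. The signature is hypothetical, and two details are assumptions rather than something stated in the text: contrast is divided by 0x4000 with integer division (which truncates toward zero), and the result is clamped to the full 0..255 byte range (clamping to the nominal 16..235 video range would be an equally valid choice).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Contrast value that leaves the picture unchanged (factor 1.0).
constexpr int32_t kContrastOne = 0x4000;

// Hypothetical Adjust: Y' = ((Y - 16) x C) + B + 16 with C = contrast / 0x4000,
// computed in 32-bit integers and clamped to 0..255 (an assumption).
inline uint8_t Adjust(uint8_t y, int32_t brightness, int32_t contrast) {
    assert(brightness >= -0x00FF && brightness <= 0x00FF);
    assert(contrast >= 0x0000 && contrast <= 0xFFFF);
    // Worst case (255 - 16) * 0xFFFF fits comfortably in int32_t.
    const int32_t scaled = (static_cast<int32_t>(y) - 16) * contrast / kContrastOne;
    const int32_t result = scaled + brightness + 16;
    return static_cast<uint8_t>(std::clamp<int32_t>(result, 0, 255));
}
```

With the article's constants, `Adjust(y, -0x0010, 0x2000)` maps 116 to 50 and 235 to 109 (the integer division truncates 109.5), while `Adjust(y, 0x0000, 0x4000)` returns y unchanged for every value.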

It is important to support non-standard video strides in order to be compatible with the Video Mixing Renderer Filter. The Video Mixing Renderer Filter can request an image with an extended stride by providing a corresponding media type on the output pin of the DMO Wrapper Filter, which passes it on to the DMO output stream. ProcessOutput should therefore be prepared for the changed media type and the extended stride by checking the biWidth field of the BITMAPINFOHEADER structure embedded in the media type.
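A portable sketch of a stride-aware frame loop for YUY2 under the rules above. Assumptions, labeled as such: the function names are hypothetical, the visible width and height come from the input media type, the input buffer is tightly packed, and the output row size in bytes is the output biWidth times 2, since YUY2 uses 16 bits per pixel and the extended stride is signalled through biWidth. AdjustY repeats the fixed-point rule of the Y' equation (0x4000 as contrast 1.0, clamped to 0..255) so that the block stands alone.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Fixed-point Y' = ((Y - 16) x C) + B + 16, C = contrast / 0x4000, clamped.
inline uint8_t AdjustY(uint8_t y, int32_t brightness, int32_t contrast) {
    const int32_t v = (static_cast<int32_t>(y) - 16) * contrast / 0x4000 + brightness + 16;
    return static_cast<uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// Row size in bytes of a YUY2 image whose BITMAPINFOHEADER has this biWidth;
// biWidth may exceed the visible width when an extended stride is requested.
inline size_t Yuy2StrideFromBiWidth(int32_t biWidth) {
    assert(biWidth > 0);
    return static_cast<size_t>(biWidth) * 2;
}

// Each 4-byte macropixel is Y0 U0 Y1 V0: adjust bytes 0 and 2, copy U and V.
// width/height are the visible size in pixels; strides are in bytes and may
// differ between input and output. Bytes past the visible width are untouched.
void ProcessYuy2Frame(const uint8_t* input, size_t inputStride,
                      uint8_t* output, size_t outputStride,
                      uint32_t width, uint32_t height,
                      int32_t brightness, int32_t contrast) {
    assert(width % 2 == 0);
    assert(inputStride >= width * 2 && outputStride >= width * 2);
    for (uint32_t row = 0; row < height; ++row) {
        const uint8_t* src = input + row * inputStride;
        uint8_t* dst = output + row * outputStride;
        for (uint32_t x = 0; x < width / 2; ++x, src += 4, dst += 4) {
            dst[0] = AdjustY(src[0], brightness, contrast);  // Y0
            dst[1] = src[1];                                 // U (shared)
            dst[2] = AdjustY(src[2], brightness, contrast);  // Y1
            dst[3] = src[3];                                 // V (shared)
        }
    }
}
```

Walking the output row by row with its own stride is what keeps the filter working when the renderer's biWidth differs from the image width; a single flat loop over the whole buffer would shift every row after the first.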

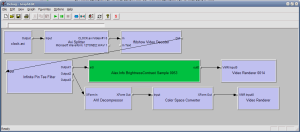

Once the processing is in place, we can check the result with the Infinite Pin Tee Filter, which duplicates the video stream into two streams: the first is rendered through our filter and the other (original) stream is rendered as is:

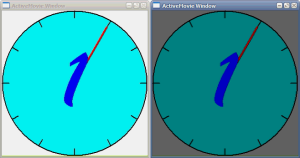

Once the graph is started, both video renderers open their windows, showing the corrected and the original video side by side.

Source code: DmoBrightnessContrastSample.02.zip (note that a Release build binary is included)

Additional notes:

- YUV Formats

- Processing in the 8-bit YUV Color Space

- Brightness and Contrast

- Using the Video Mixing Renderer

- BITMAPINFOHEADER Structure – see Remarks on calculating stride
