Some time ago there were some pictures explaining the performance and other properties of a software H.264 encoder (x264). This time it is the turn of hardware H.264 encoders, two of them side by side. Neither encoder is new: Intel® Quick Sync Video H.264 Encoder and NVIDIA H.264 Encoder have both been around for a while. Some would say it is already time for H.265 encoders.

Either way, on my test machine both encoders are available without any additionally installed software (that is, no need for Intel Media SDK, NVIDIA NVENC, redistributable files etc.). Out of the box, Windows 10 offers a stock software-only encoder, and the hardware encoders come in the form of Media Foundation Transforms (MFTs).

Environment:

- OS: Windows 10 Pro

- CPU: Intel i7-4790

- Video Adapter 1: Intel HD Graphics 4600 (on-board, not connected to monitors)

- Video Adapter 2: NVIDIA GeForce GTX 750

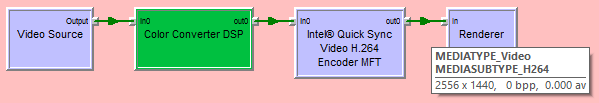

It is neither convenient nor fun to do things with Media Foundation, but the good news is that Media Foundation components are easily separable. A wrapper over the MFTs converts them into DirectShow filters and makes them available to DirectShow, where it is much easier to set up various test runs. The pictures below show metrics for the encoder defaults only (bitrate, profile and the many other options combine into a great number of encoding modes). Still, the pictures do show that both encoders are well usable for many scenarios, including HD processing, simultaneous data processing etc.

Test runs are as simple as taking a reference video source signal with different properties, pushing it through the encoder filter and either writing the output to a file (to inspect the footage) or sending it to the Null Renderer Filter to measure performance.

Intel® Quick Sync Video H.264 Encoder produces files like these: 720×480.mp4, 2556×1440.mp4, which are of decent quality (considering the low bitrate and the “hard to handle” background changes). NVIDIA H.264 Encoder produces somewhat better output, presumably by choosing a higher bitrate. Either way, both encoders offer a number of ways to fine tune the encoding process: not just bitrate, profile, GOP length and B frame settings, but also more sophisticated parameters.

Intel® Quick Sync Video H.264 Encoder MFT

CODECAPI_AVEncCommonRateControlMode: VT_UI4 0, default VT_UI4 0, modifiable // eAVEncCommonRateControlMode_CBR = 0

CODECAPI_AVEncCommonQuality: minimal VT_UI4 0, maximal VT_EMPTY, step VT_EMPTY

CODECAPI_AVEncCommonBufferSize: VT_UI4 3131961357, default VT_UI4 0, modifiable

CODECAPI_AVEncCommonMaxBitRate: default VT_UI4 0

CODECAPI_AVEncCommonMeanBitRate: VT_UI4 3131961357, default VT_UI4 2222000, modifiable

CODECAPI_AVEncCommonQualityVsSpeed: VT_UI4 50, default VT_UI4 50, modifiable

CODECAPI_AVEncH264CABACEnable: modifiable

CODECAPI_AVEncMPVDefaultBPictureCount: VT_UI4 0, default VT_UI4 0, modifiable

CODECAPI_AVEncMPVGOPSize: VT_UI4 128, default VT_UI4 128, modifiable

CODECAPI_AVEncVideoEncodeQP:

CODECAPI_AVEncVideoForceKeyFrame: VT_UI4 0, default VT_UI4 0, modifiable

CODECAPI_AVLowLatencyMode: VT_BOOL 0, default VT_BOOL 0, modifiable

CODECAPI_AVEncVideoLTRBufferControl: VT_UI4 65536, values { VT_UI4 65536, VT_UI4 65537, VT_UI4 65538, VT_UI4 65539, VT_UI4 65540, VT_UI4 65541, VT_UI4 65542, VT_UI4 65543, VT_UI4 65544, VT_UI4 65545, VT_UI4 65546, VT_UI4 65547, VT_UI4 65548, VT_UI4 65549, VT_UI4 65550, VT_UI4 65551, VT_UI4 65552 }, modifiable

CODECAPI_AVEncVideoMarkLTRFrame:

CODECAPI_AVEncVideoUseLTRFrame:

CODECAPI_AVEncVideoEncodeFrameTypeQP: default VT_UI8 111670853658, minimal VT_UI8 0, maximal VT_UI8 219046674483, step VT_UI8 1

CODECAPI_AVEncSliceControlMode: VT_UI4 0, default VT_UI4 2, minimal VT_UI4 2, maximal VT_UI4 2, step VT_UI4 0, modifiable

CODECAPI_AVEncSliceControlSize: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 8160, step VT_UI4 1, modifiable

CODECAPI_AVEncVideoMaxNumRefFrame: minimal VT_UI4 0, maximal VT_UI4 16, step VT_UI4 1, modifiable

CODECAPI_AVEncVideoTemporalLayerCount: default VT_UI4 1, minimal VT_UI4 1, maximal VT_UI4 3, step VT_UI4 1, modifiable

CODECAPI_AVEncMPVDefaultBPictureCount: VT_UI4 0, default VT_UI4 0, modifiable
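Two things in the Intel dump above are easier to read in hexadecimal. The value 3131961357 reported as the current buffer size and mean bitrate is 0xBAADF00D, a pattern commonly associated with uninitialized heap memory on Windows, so those two current values are probably not real settings (this is my reading of the number, not something the encoder documents). The CODECAPI_AVEncVideoLTRBufferControl values look like two 16-bit fields; the sketch below assumes the layout from Microsoft's description of the property as I understand it (low word: number of long-term reference frames, high word: trust mode). The helper names are made up for illustration.

```python
# Fill pattern commonly seen in uninitialized heap memory on Windows.
UNINITIALIZED_HEAP_FILL = 0xBAADF00D


def looks_uninitialized(value):
    """Return True if a VT_UI4 value equals the 0xBAADF00D fill pattern."""
    return value == UNINITIALIZED_HEAP_FILL


def decode_ltr_buffer_control(value):
    """Split a VT_UI4 LTR buffer control value into its two 16-bit halves.

    Assumed layout: bits 0..15 hold the LTR frame count,
    bits 16..31 hold the trust mode.
    """
    return {"trust_mode": (value >> 16) & 0xFFFF, "ltr_frames": value & 0xFFFF}


if __name__ == "__main__":
    print(looks_uninitialized(3131961357))
    # The Intel MFT lists 65536 through 65552 as accepted values.
    for raw in (65536, 65537, 65552):
        print(raw, decode_ltr_buffer_control(raw))
```

Read this way, the Intel encoder accepts 0 to 16 long-term reference frames, always with trust mode 1.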

NVIDIA H.264 Encoder MFT

CODECAPI_AVEncCommonRateControlMode: VT_UI4 0

CODECAPI_AVEncCommonQuality: VT_UI4 65

CODECAPI_AVEncCommonBufferSize: VT_UI4 8923353

CODECAPI_AVEncCommonMaxBitRate: VT_UI4 8923353

CODECAPI_AVEncCommonMeanBitRate: VT_UI4 2974451

CODECAPI_AVEncCommonQualityVsSpeed: VT_UI4 33

CODECAPI_AVEncH264CABACEnable: VT_BOOL -1

CODECAPI_AVEncMPVGOPSize: VT_UI4 50

CODECAPI_AVEncVideoEncodeQP: VT_UI8 26

CODECAPI_AVEncVideoForceKeyFrame:

CODECAPI_AVEncVideoMinQP: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 51, step VT_UI4 1

CODECAPI_AVLowLatencyMode: VT_BOOL 0

CODECAPI_AVEncVideoLTRBufferControl: VT_UI4 0, values { VT_I4 65537, VT_I4 65538 }

CODECAPI_AVEncVideoMarkLTRFrame:

CODECAPI_AVEncVideoUseLTRFrame:

CODECAPI_AVEncVideoEncodeFrameTypeQP: VT_UI8 111670853658

CODECAPI_AVEncSliceControlMode: VT_UI4 2, minimal VT_UI4 0, maximal VT_UI4 2, step VT_UI4 1

CODECAPI_AVEncSliceControlSize: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 3, step VT_UI4 1

CODECAPI_AVEncVideoMaxNumRefFrame: VT_UI4 1, minimal VT_UI4 0, maximal VT_UI4 16, step VT_UI4 1

CODECAPI_AVEncVideoMeanAbsoluteDifference: VT_UI4 0

CODECAPI_AVEncVideoMaxQP: VT_UI4 51, minimal VT_UI4 0, maximal VT_UI4 51, step VT_UI4 1

CODECAPI_AVEncVideoROIEnabled: VT_UI4 0

CODECAPI_AVEncVideoTemporalLayerCount: minimal VT_UI4 1, maximal VT_UI4 3, step VT_UI4 1
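The large VT_UI8 numbers reported for CODECAPI_AVEncVideoEncodeFrameTypeQP are packed values. Assuming the layout from Microsoft's description of the property as I understand it (bits 0..15 for I frames, 16..31 for P frames, 32..47 for B frames), a minimal Python sketch with a hypothetical helper name unpacks them:

```python
def decode_frame_type_qp(packed):
    """Unpack a VT_UI8 frame type QP value into per-frame-type QPs.

    Assumed layout: three 16-bit fields for I, P and B frames,
    starting from the least significant bits.
    """
    return {
        "I": packed & 0xFFFF,
        "P": (packed >> 16) & 0xFFFF,
        "B": (packed >> 32) & 0xFFFF,
    }


if __name__ == "__main__":
    # Default reported by the Intel MFT, current value reported by NVIDIA.
    print(decode_frame_type_qp(111670853658))
    # Maximum reported by the Intel MFT.
    print(decode_frame_type_qp(219046674483))
```

Under that assumption the default is QP 26 for all three frame types and the maximum is 51, which agrees with the CODECAPI_AVEncVideoEncodeQP and CODECAPI_AVEncVideoMaxQP values in the NVIDIA list.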

An important property of a hardware encoder is that, even though it does consume some CPU time, most of the complexity is offloaded to video hardware. In all single stream test runs, the CPU (four cores, eight logical processors) was loaded no more than 30%, including the time required to synthesize the image using WIC and Direct2D and to convert it to YUV format on the CPU. That is, offloading video encoding to the GPU is a convenient way to free the CPU for real time video processing applications.

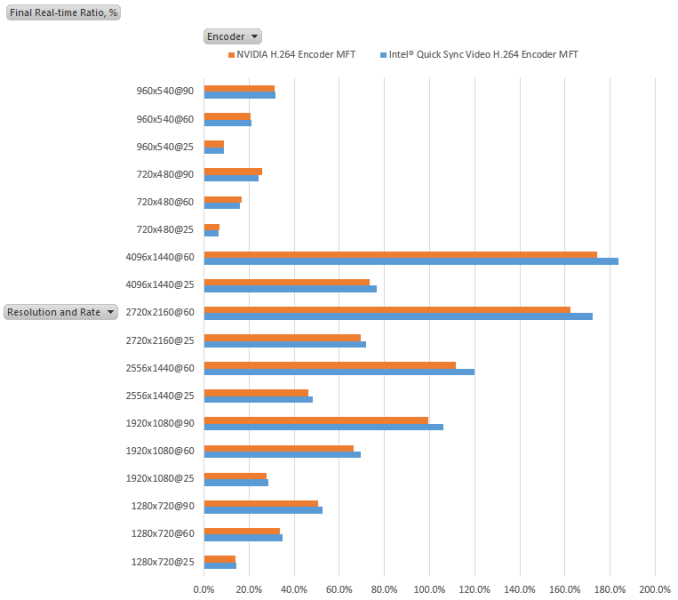

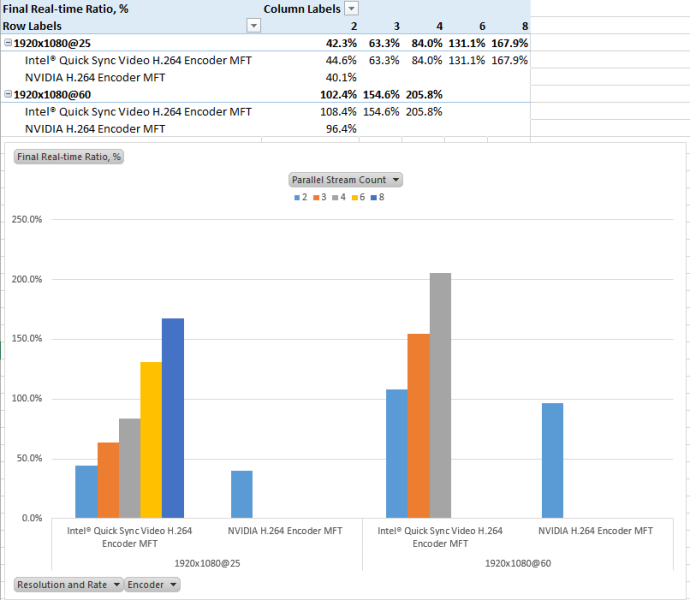

I was mostly interested in how well the encoders handle real time data, especially when they are used to record lengthy sessions. Both encoders appear to be fast enough to handle 1920×1080 HD video at frame rates of 60 and higher. The test encoded at the highest rate possible, and 100% on the charts corresponds to the situation where it takes one second to synthesize and encode one second of video, no matter what the effective CPU/GPU load is. That is, values below 100% indicate the ability to encode video content in real time.

Basically, the numbers show that both encoders are fast enough to reliably encode a 1080p60 stream.
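The percentage on the charts can be written down as a small calculation. This is a sketch of the metric as described above, with hypothetical function names; the numbers in the usage lines are illustrative, not measurements from the test runs.

```python
def realtime_load_percent(processing_seconds, frame_count, frame_rate):
    """Wall clock time spent, as a percentage of the video's own duration.

    100 means one second of work per one second of video.
    """
    media_seconds = frame_count / frame_rate
    return 100.0 * processing_seconds / media_seconds


def is_realtime_capable(load_percent):
    """Values below 100% mean the pipeline keeps up with the source."""
    return load_percent < 100.0


if __name__ == "__main__":
    # Illustrative: 600 frames of 60 fps video processed in 4 seconds.
    load = realtime_load_percent(4.0, 600, 60)
    print(load, is_realtime_capable(load))
```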

Looking at it from another standpoint, the ability to process two or more H.264 encoding sessions at once, the encoder from NVIDIA has an important limitation of two sessions per system (supposedly related thread; for this or another reason the test run with three streams fails).

Neither encoder is really suitable for reliable encoding of two 1080p60 streams simultaneously (though perhaps some fine tuning might make things faster by choosing an appropriate encoding mode). However, both look fine for encoding a 1080p or lower resolution stream. Clearly, Intel’s encoder can be used to encode multiple low resolution streams in parallel, or to mix real time encoding with background encoding (provided that the background encoding is throttled to let the real time stream run fast enough). If real time encoding is not necessary, both encoders can do the job as well. With NVIDIA the application needs to make sure that only two sessions are running simultaneously, while Intel’s encoder can be used in a more flexible way.

Also, NVIDIA’s encoder is slightly faster; Intel’s, however, allows three or more concurrently encoded streams and also accepts RGB input directly, without conversion to YUV.
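One simple way for an application to stay within a two-session limit is to guard encoder sessions with a counting semaphore. The following is a minimal Python sketch of that idea, not code from the test application: the class and function names are hypothetical, the `job` callable stands in for a complete encoding session, and the limit of two is the figure observed in these tests rather than a value queried from the driver.

```python
import threading
import time


class EncoderSessionLimiter:
    """Context manager that admits at most max_sessions holders at a time."""

    def __init__(self, max_sessions=2):
        self._slots = threading.BoundedSemaphore(max_sessions)
        self._lock = threading.Lock()
        self._active = 0
        self.peak_active = 0  # highest number of simultaneous sessions seen

    def __enter__(self):
        self._slots.acquire()  # blocks while all slots are taken
        with self._lock:
            self._active += 1
            self.peak_active = max(self.peak_active, self._active)
        return self

    def __exit__(self, exc_type, exc, traceback):
        with self._lock:
            self._active -= 1
        self._slots.release()
        return False  # do not swallow exceptions from the session


def run_sessions(limiter, job_count, job):
    """Run job(index) on job_count threads, each guarded by the limiter."""

    def guarded(index):
        with limiter:
            job(index)

    threads = [threading.Thread(target=guarded, args=(i,)) for i in range(job_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


if __name__ == "__main__":
    limiter = EncoderSessionLimiter(max_sessions=2)
    run_sessions(limiter, 5, lambda index: time.sleep(0.05))
    print("peak simultaneous sessions:", limiter.peak_active)
```

Sessions beyond the limit simply wait for a free slot, which suits background encoding; a real time stream would rather need a slot reserved for it up front.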

There is also Intel® Hardware H265 Encoder MFT available for H.265 encoding, but this is going to be another story some time later.

From time to time I am asked about the filters that wrap hardware encoders for video.

As of now the wrapper filters I developed are not available as standalone filters. I am also not aware of any other wrapper available on the Internet, even though the task is straightforward: a Media Foundation MFT has to be wrapped as a filter. So I suppose those interested will need to develop their own wrappers.

The MFTs from Intel, NVIDIA and AMD are all similar, and if one develops a wrapper which works with any one of the three, chances are high that the wrapper will work with the others with minimal changes, if any. One does not need to develop a universal wrapper; it is good to start with the assumption that a hardware video encoder is a single input, single output asynchronous MFT taking NV12 and possibly also ARGB32 input, and producing H264 output.

One of the important properties of the MFTs is that they are Direct3D aware. DirectShow does not define a widely accepted method to use Direct3D-enabled media samples. So a wrapper developer will need to define their own method to pass media samples backed by video memory, or be satisfied with regular DirectShow media samples and allocators, which is still okay.
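The "asynchronous" part of that assumption shapes the wrapper most: an asynchronous MFT tells the caller through events when it wants input (METransformNeedInput) and when it has output ready (METransformHaveOutput), and the wrapper feeds or drains it only in response. The following Python toy model illustrates just that control flow. It is not Media Foundation code: `ToyAsyncEncoder` and `encode_all` are hypothetical names, and the "encoding" is a string operation.

```python
from collections import deque

NEED_INPUT = "METransformNeedInput"
HAVE_OUTPUT = "METransformHaveOutput"


class ToyAsyncEncoder:
    """Stand-in for an asynchronous encoder MFT (a toy, not a real API)."""

    def __init__(self):
        # After streaming starts the encoder asks for its first frame.
        self.events = deque([NEED_INPUT])
        self._ready = deque()

    def process_input(self, frame):
        # A real encoder works in the background; the toy finishes at once,
        # announces the output and asks for the next frame.
        self._ready.append(f"h264({frame})")
        self.events.append(HAVE_OUTPUT)
        self.events.append(NEED_INPUT)

    def process_output(self):
        return self._ready.popleft()


def encode_all(encoder, frames):
    """Drive the encoder purely from its events, as a wrapper filter would."""
    pending = deque(frames)
    encoded = []
    while encoder.events:
        event = encoder.events.popleft()
        if event == NEED_INPUT:
            if pending:
                encoder.process_input(pending.popleft())
            # With no frames left, a real wrapper would start draining here.
        elif event == HAVE_OUTPUT:
            encoded.append(encoder.process_output())
    return encoded


if __name__ == "__main__":
    print(encode_all(ToyAsyncEncoder(), ["frame0", "frame1", "frame2"]))
```

In a DirectShow wrapper the input side of this loop maps onto the input pin receiving media samples, and the output side onto delivering samples downstream, typically from a worker thread.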