Fooling Around

// Oprogramowanie Roman Ryltsov

Shadertoy & .NET MAUI CommunityToolkit MediaElement

A side effect from integration of external video signal coming from native code C++ application with a .NET MAUI Blazor app: a reference video source (based on nice Shader Art Coding Introduction shadertoy – an HLSL equivalent can be found in resources of the attached application) is taken into .NET MAUI CommunityToolkit MediaElement control (see also Play Audio and Video in .NET MAUI apps with the new MediaElement).

The application below is a reduced version to simply put the video source into a slim C++ application, see this tiny CompositionVideoDemo application if you are curious how to host a Windows.Media.Core.MediaSource instance in a C++ application.

A codec for The Imaging Source cameras and [discontinued] IC Capture 2.5 software

2025-03-25 Update: https://iccapturecodecs.alax.info/ hosts a productized version of the codec, with demonstration/free trial option.

Truly advanced industrial cameras by The Imaging Source are provided with image acquisition software, End User Software is a free IC Capture application, which ended up with a few problems to resolve.

First of all, “the older” application is a kind of discontinued. A Windows application version 2.5 was on the website as recently as a few weeks ago, but the announcement of release of IC Capture version 4 removed the older application completely.

Another The Imaging Source IC Measure application is renamed into the name of its predecessor IC Capture with a version increment… Now IC Capture 2.5 is almost gone. OK, almost – there are still some traces: https://www-st.theimagingsource.com/en-us/support/download/iccapture-2.5.1557.4007/

The question is this: the cameras are good enough to capture video with 12-bit color depth, e.g. 33U series. Is it possible to record the original video stream in certain popular video format for use in other applications?

Under “AVI Files and Codecs Support” the new application does not seem to offer AVI support anymore, and offers recording to MP4 files with H.264 or H.265 encoding. You could give it a try and record 4000×3000@20 with 12-bit wide dynamic range into one of these formats, hopefully without frame dropping.

The previous IC Capture 2.5 application in Windows had better integration options and did offer AVI recording with interoperability with third party software. It was still quite a challenge to find a suitable codec, yet it is possible now with a specialized GoPro CineForm codec designed to work with IC Capture 2.5:

- the codec directly accepts The Imaging Source driver’s RGB64 format (original 12-bit color depth from camera without reduction to 8 bits) with WDR (wide dynamic range) option tuned on video

- the codec retains 12-bit precision in the produced output (even though the output bitrate is pretty high!)

- the codec does its best to not lose input frames, and uses the most of CPU with parallel processing, in order to save as much as possible from the original data acquisition; to give some estimate, an Intel i7 CPU of 12th generation or newer can handle the compression of WDR 4000×3000@20 video stream and enable its offline post-processing where all the data is securely recorded

Essentially, the codec gives fine tuning to discontinued IC Capture 2.5 extending its recording abilities so that they exceed those of current IC Capture 4 software in Windows… The produced files are nicely accepted into software like DaVinci Resolve, or good old Media Player Classic Home Cinema.

The download for the pre-release of the codec is below. Requirements:

- Windows 10/11: minimal version is not identified, the software should be well compatible with a few last versions of Windows

- 64-bit Windows and 64-bit of IC Capture 2.5 (x64): there is no 32-bit build as making no much sense; IC Capture 2.5 installs both Win32 and x64 variants of the application, please use x64 and there in the codec choice UI you will be able to see additional codec option

- unregistered codec will overlay “DEMO CODEC” text, the overlay goes away with addition of valid license token for the codec (to be described later, or please get in touch for a quote)

- the codec is designed to work only with IC Capture 2.5 x64 software

Downloads are at: https://iccapturecodecs.alax.info/

Enhancing Video Capture: Bridging the Gap Between Hardware and Software

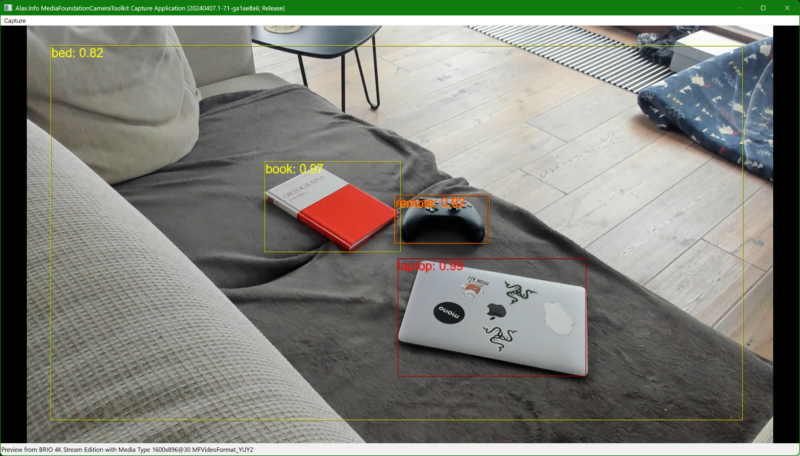

Demo: Webcam with YOLOv4 object detection via Microsoft Media Foundation and DirectML

As we see announcements for more and more powerful NPUs and AI support in newer consumer hardware, here is a new demo with Artificial Intelligence attached to a good old sample camera application based on the Microsoft Media Foundation Capture Engine API.

This demo is a blend of several technologies: Media Foundation, Direct3D 11, Direct3D 12, DirectML (of Windows AI), and Direct2D, all at once!

The video data never leaves the video hardware. We have the video captured via Media Foundation, internally processed with the Windows Camera Frame Server service, and then shared through the Capture Engine API (this perhaps still does not really work as “shared access” but we are used to this). The data then is converted from Direct3D 11 to Direct3D 12 and, further, to a DirectML tensor. From there, we run the YOLOv4 model (see DirectML YOLOv4 sample) on the data while the video smoothly goes to preview presentation. As soon as the Machine Learning model produces predictions, we pass the data to a Direct2D overlay, which attaches a mask onto the video flow going to the presentation.

The compiled application’s only dependency is the yolov4.weights file (you need to download it separately and place in the same directory as the executable, there is an alternative download link); the rest is the Windows API and the application itself. As the work is handled by the GPU, you will see Task Manager showing GPU load close to 100% while CPU load is minimal.

The model is trained on 608 by 608 pixel images, and so is the model input and rescaling. That is, the resolution of the camera video does not matter much, except that the overlay mask is more accurate with higher resolution video—the overlay is burnt into the video stream itself. To show the recent progress in hardware capabilities, here are some new numbers:

- Intel(R) UHD Graphics 770 integrated in the Intel® Core™ i9-13900K CPU achieves around 2.5 fps for real-time video processing.

- AMD Radeon 780M Graphics integrated in the AMD Ryzen 9 7940HS CPU runs the processing up to four times faster, achieving around 8.0 fps.

- [2025-04-04 Update] NVIDIA GeForce RTX 2060 SUPER is capable to process video at around 24 fps.

Intel’s CPU is still the good one but its video is not so, and it’s GPU at work now. The AMD Ryzen 9 7940HS includes AMD’s dedicated AI XDNA Technology. The NPU performance is rated at up to 10 TOPS (Tera Operations Per Second), upcoming Copilot+ PCs are expected to have 40+ TOPS, so the new hardware should be a good fit for this class of applications.

Have fun!

Minimal Requirements:

- Windows 11 x64 — DirectML might run on Windows 10 as well, but for the simplicity of the demo it requires Windows 11; Windows 11 ARM64 is of certain interest as well, but I don’t have hardware at hand to check)

- DirectX 12 compatible video adapter, which is used across APIs — for the simplicity of the demo there is no option to offload to another GPU or NPU

- The .weights file downloaded and placed as mentioned, besides the application in the archive above

IBC 2024 Accelerator Project: Scalable Ultra-Low Latency Streaming for Premium Sports (Accelerator Session)

IBC 2024 Accelerator Project: Scalable Ultra-Low Latency Streaming for Premium Sports

Something I built on my development machine in order to flash into Android set-top box device for the expo to entertain people for three days in a row… I hope everyone had fun!