A new installment in the series of demonstrations of what one can squeeze out of the Windows Media Foundation Capture Engine API.

This video camera capture demonstration application features a mounted MPEG-DASH (Dynamic Adaptive Streaming over HTTP) server. The concept is straightforward: during video capture, the application takes the video feed and compresses it in H.264/AVC format using GPU hardware-assisted encoding. It then retains approximately two minutes of data in memory and generates an MPEG-DASH-compatible view of this data. The view follows the dynamic manifest format specified by ISO/IEC 23009-1. The entire system is integrated with the HTTP Server API and accessible over the network.
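The "keep roughly two minutes in memory" idea can be sketched as a rolling window of encoded segments. This is only an illustration under assumptions: `Segment` and `RetentionWindow` are hypothetical names, the two-second segment duration used in the note below is a guess, and the application's actual data structures are not shown in this post.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical types for illustration only
struct Segment
{
    std::uint64_t Number;                // Monotonically increasing segment number
    std::chrono::milliseconds Duration;  // Media duration of the segment
    std::vector<std::uint8_t> Data;      // Encoded H.264/AVC payload
};

class RetentionWindow
{
public:
    explicit RetentionWindow(std::chrono::milliseconds Capacity) : m_Capacity(Capacity)
    {
        assert(Capacity.count() > 0);
    }

    // Appends the newest segment, then drops the oldest ones as long as
    // the remaining segments still cover the requested capacity
    void Push(std::vector<std::uint8_t> Data, std::chrono::milliseconds Duration)
    {
        m_Segments.push_back(Segment { m_NextNumber++, Duration, std::move(Data) });
        m_Total += Duration;
        while(m_Segments.size() > 1 && m_Total - m_Segments.front().Duration >= m_Capacity)
        {
            m_Total -= m_Segments.front().Duration;
            m_Segments.pop_front();
        }
    }

    // Returns nullptr if the segment already left the window or is not produced yet
    Segment const* Find(std::uint64_t Number) const
    {
        if(m_Segments.empty() || Number < m_Segments.front().Number || Number > m_Segments.back().Number)
            return nullptr;
        return &m_Segments[static_cast<std::size_t>(Number - m_Segments.front().Number)];
    }

    std::size_t Count() const { return m_Segments.size(); }
    std::chrono::milliseconds Total() const { return m_Total; }

private:
    std::chrono::milliseconds m_Capacity;
    std::chrono::milliseconds m_Total { 0 };
    std::uint64_t m_NextNumber = 1;
    std::deque<Segment> m_Segments;
};
```

With a 120-second capacity and two-second segments, such a window settles at 60 segments, and an HTTP handler would answer a segment request with `Find`. A real server would also need synchronization between the capture thread and the request handlers, which is omitted here.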

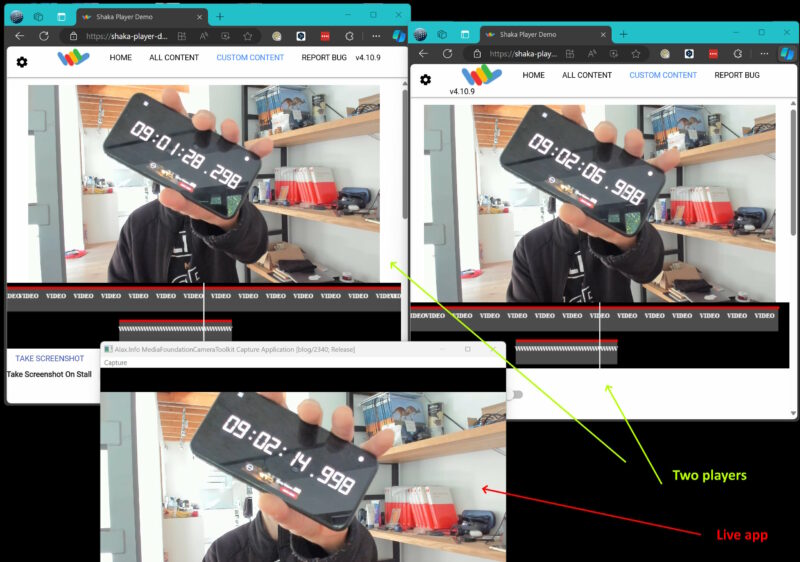

Since this is pretty standard streaming media (just without adaptive bitrate capability: the broadcast takes place in a single quality), the signal can be played back with something like Google Shaka Player. As the application keeps the last two minutes of data, you can rewind the web player to see yourself in the past… and then fast-forward yourself into the future once again.
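To make the rewinding a bit more concrete, here is a simplified sketch of which segment numbers a player could request at a given moment. It assumes fixed-duration segments addressed by number and a time-shift window comparable to the MPD `timeShiftBufferDepth` attribute. The function name, the parameters and the simplified availability model are illustrative assumptions, not the application's code and not the full rules of ISO/IEC 23009-1.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <optional>

struct SegmentRange
{
    std::uint64_t First; // Oldest segment number still inside the time-shift window
    std::uint64_t Last;  // Newest fully produced segment number
};

// Hypothetical helper: computes the inclusive range of requestable segment numbers
inline std::optional<SegmentRange> AvailableSegments(
    std::chrono::milliseconds Elapsed,          // Time since availabilityStartTime
    std::chrono::milliseconds SegmentDuration,  // Fixed segment duration
    std::chrono::milliseconds TimeShiftDepth,   // How far back a client may seek
    std::uint64_t StartNumber = 1)
{
    assert(SegmentDuration.count() > 0 && TimeShiftDepth.count() >= 0);
    if(Elapsed < SegmentDuration)
        return std::nullopt; // No complete segment yet
    // Number of segments fully produced so far
    auto const Completed = static_cast<std::uint64_t>(Elapsed / SegmentDuration);
    // Media time at which the time-shift window begins
    auto const WindowStart = std::max(Elapsed - TimeShiftDepth, std::chrono::milliseconds::zero());
    // Zero-based index of the segment containing the window start, clamped to the newest segment
    auto const FirstIndex = std::min(static_cast<std::uint64_t>(WindowStart / SegmentDuration), Completed - 1);
    return SegmentRange { StartNumber + FirstIndex, StartNumber + Completed - 1 };
}
```

Five minutes into a capture with two-second segments and a two-minute window, this model gives segments 91 through 150: one client can sit at the live edge while another plays from two minutes back.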

Just Windows platform APIs, Microsoft Windows Media Foundation and C++ code; the only external library is Windows Implementation Libraries (WIL), if that counts as an external library at all. No FFmpeg, no GStreamer and the like. No curl, no libhttpserver or any other third-party web server. That is, it is as simple as this:

```cpp
auto const ToSeconds = [] (NanoDuration const& Value, double Multiplier = 1.0) -> std::wstring
{
    return Format(L"PT%.2fS", Multiplier * Value.count() / 1E7);
};
Element Mpd(L"MPD", // ISO/IEC 23009-1:2019; Table 3 — Semantics of MPD element; 5.3.1.2 Semantics
{
    { L"xmlns", L"urn:mpeg:dash:schema:mpd:2011" },
    //{ L"xmlns", L"xsi", L"http://www.w3.org/2001/XMLSchema-instance" },
    //{ L"xsi", L"schemaLocation", L"urn:mpeg:dash:schema:mpd:2011 http://standards.iso.org/ittf/PubliclyAvailableStandards/MPEG-DASH_schema_files/DASH-MPD.xsd" },
    { L"profiles", L"urn:mpeg:dash:profile:isoff-live:2011" },
    { L"type", L"dynamic" },
    { L"maxSegmentDuration", ToSeconds(2s) },
    { L"minBufferTime", ToSeconds(4s) },
    { L"minimumUpdatePeriod", ToSeconds(1s) },
    { L"suggestedPresentationDelay", ToSeconds(3s) },
    { L"availabilityStartTime", FormatDateTime(BaseLiveTime) },
    //{ L"publishTime", FormatDateTime(BaseLiveTime) },
});
```

The video is compressed once as the capture goes, and the application is integrated with the native HTTP web server, so the whole thing is pretty scalable: connecting multiple clients is fine, because the application mostly just provides a view into the H.264/AVC data temporarily kept in memory within the retention window. For the same reason, the resource consumption of the solution is what you would expect it to be. The playback clients do not even have to play the same historical part of the content.
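The manifest snippet relies on helpers such as `Format` and `FormatDateTime` that are not shown in the post. A minimal sketch of how values of this shape could be produced with the standard library follows. The names `HundredNanoseconds`, `FormatSeconds` and `FormatUtcDateTime` are hypothetical, and the 100 ns tick size is an assumption inferred from the division by `1E7` above.

```cpp
#include <cassert>
#include <chrono>
#include <ctime>
#include <cwchar>
#include <ratio>
#include <string>

// Assumption: durations are counted in 100 ns units, matching the division by 1E7
using HundredNanoseconds = std::chrono::duration<long long, std::ratio<1, 10000000>>;

// Formats a duration as an xs:duration value expressed in seconds, for example "PT2.00S"
inline std::wstring FormatSeconds(HundredNanoseconds Value, double Multiplier = 1.0)
{
    assert(Value.count() >= 0);
    wchar_t Text[64];
    std::swprintf(Text, sizeof Text / sizeof *Text, L"PT%.2fS",
        Multiplier * static_cast<double>(Value.count()) / 1E7);
    return Text;
}

// Formats a time point as a UTC xs:dateTime value, for example "1970-01-01T00:00:00Z"
inline std::wstring FormatUtcDateTime(std::chrono::system_clock::time_point Value)
{
    std::time_t const Time = std::chrono::system_clock::to_time_t(Value);
    std::tm Tm {};
#if defined(_WIN32)
    gmtime_s(&Tm, &Time); // Thread-safe variant in the Microsoft C runtime
#else
    gmtime_r(&Time, &Tm); // POSIX equivalent
#endif
    wchar_t Text[64];
    std::wcsftime(Text, sizeof Text / sizeof *Text, L"%Y-%m-%dT%H:%M:%SZ", &Tm);
    return Text;
}
```

Passing `std::chrono::seconds(2)` to `FormatSeconds` works through the implicit lossless conversion between `std::chrono` duration types, which is presumably what makes `ToSeconds(2s)` compile in the original lambda as well.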

So, this demo opens a path to next steps to follow once in a while: audio, DRM, an HLS version, low latency variants such as LL-HLS, MPEG-DASH segment sequence representations.

So just get the webcam video capture application running, and open the MPEG-DASH manifest http://localhost/MediaFoundationCameraToolkit/Capture/manifest.mpd with https://shaka-player-demo.appspot.com/ using the “Custom Content” option.

Note that the application requires elevated administrative access in order to use the HTTP Server API (AFAIR it is possible to do this another way, but it is not needed this time).

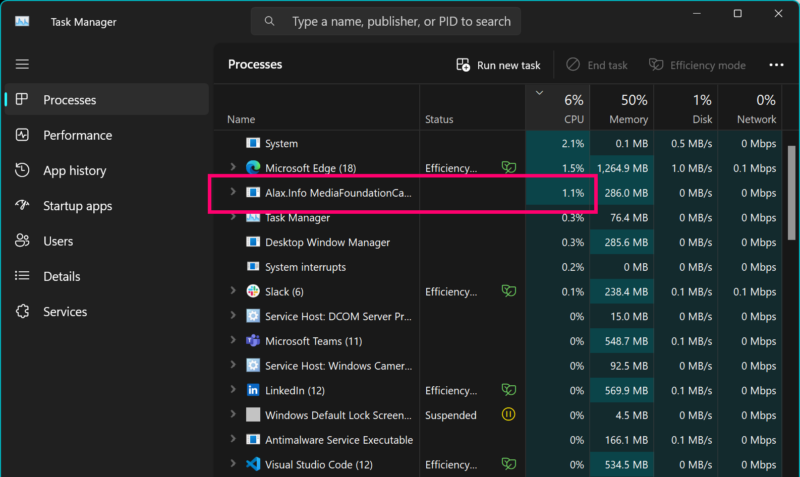

The application doing video capture, rendering the 1920×1080@30 stream to the user interface, teeing the signal into additional processing, doing hardware-assisted video encoding, packaging, and serving MPEG-DASH content does not take too many resources: it is just something that makes good sense.

Oh, and one can also use standard C# tooling to display this sort of video signal; here we go with the standard PlayReady C# Sample with a XAML MediaElement inside: