GPU vendors (AMD, Intel, NVIDIA) ship their hardware with drivers, which in turn provide a hardware-assisted decoder for JPEG video (also known as MJPG, MJPEG, and Motion JPEG) in the form of a Media Foundation Transform (MFT).

JPEG is not included in the DirectX Video Acceleration (DXVA) 2.0 specification, but the hardware does carry an implementation of the decoder. A separate, additional MFT is a natural way to provide OS integration.

AMD’s decoder is named “AMD MFT MJPEG Decoder” and looks weird from the start. It is marked as MFT_ENUM_FLAG_HARDWARE, which is good, but this normally implies that the MFT is also marked as MFT_ENUM_FLAG_ASYNCMFT, and this MFT lacks that flag. Another AMD decoder MFT, “AMD D3D11 Hardware MFT Playback Decoder”, has the same problem.

Hardware MFTs must use the new asynchronous processing model…

Presumably the MFT behaves like a normal asynchronous MFT. However, as long as this markup has no side effects with Microsoft’s own software, AMD does not seem to care about the confusion it causes for others.
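The inconsistency is easy to express as a bit test. Below is a minimal Python sketch, not real enumeration code: the three flag values mirror the `MFT_ENUM_FLAG` constants from `mfapi.h`, while the helper names `describe_flags` and `is_hardware_without_async` are hypothetical, and the sample value only reflects the registration as described above (hardware flag set, asynchronous flag absent).

```python
# Flag values mirrored from the MFT_ENUM_FLAG enumeration in mfapi.h.
MFT_ENUM_FLAG_SYNCMFT = 0x00000001
MFT_ENUM_FLAG_ASYNCMFT = 0x00000002
MFT_ENUM_FLAG_HARDWARE = 0x00000004


def describe_flags(flags):
    """Return the names of the three flags above that are set in `flags`."""
    names = []
    if flags & MFT_ENUM_FLAG_SYNCMFT:
        names.append("SYNCMFT")
    if flags & MFT_ENUM_FLAG_ASYNCMFT:
        names.append("ASYNCMFT")
    if flags & MFT_ENUM_FLAG_HARDWARE:
        names.append("HARDWARE")
    return names


def is_hardware_without_async(flags):
    """True when an MFT is registered as hardware but not as asynchronous."""
    is_hardware = bool(flags & MFT_ENUM_FLAG_HARDWARE)
    is_async = bool(flags & MFT_ENUM_FLAG_ASYNCMFT)
    return is_hardware and not is_async


if __name__ == "__main__":
    # Assumed registration flags, per the description in the text.
    registered = MFT_ENUM_FLAG_HARDWARE
    print(describe_flags(registered), is_hardware_without_async(registered))
```

In a real application the flags would come from the MFT's registration or activation attributes rather than from a hard-coded value.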

Furthermore, the registration information for this decoder suggests that it can decode into the MFVideoFormat_NV12 video format, and sadly this is again an inaccurate promise. Despite the claim, the capability is missing, and Microsoft’s Video Processor MFT jumps in as needed to perform the format conversion.

These were just minor things, more or less easy to tolerate. However, a rule of thumb is that the Media Foundation glue layer provided by technology partners such as GPU vendors satisfies only the minimal certification requirements, and beyond that it causes suffering and pain to anyone who wants to use it in real-world scenarios.

AMD’s take on making developers feel miserable is the way hardware-assisted JPEG decoding actually takes place.

The thread 0xc880 has exited with code 0 (0x0).

The thread 0x593c has exited with code 0 (0x0).

The thread 0xa10 has exited with code 0 (0x0).

The thread 0x92c4 has exited with code 0 (0x0).

The thread 0x9c14 has exited with code 0 (0x0).

The thread 0xa094 has exited with code 0 (0x0).

The thread 0x609c has exited with code 0 (0x0).

The thread 0x47f8 has exited with code 0 (0x0).

The thread 0xe1ec has exited with code 0 (0x0).

The thread 0x6cd4 has exited with code 0 (0x0).

The thread 0x21f4 has exited with code 0 (0x0).

The thread 0xd8f8 has exited with code 0 (0x0).

The thread 0xf80 has exited with code 0 (0x0).

The thread 0x8a90 has exited with code 0 (0x0).

The thread 0x103a4 has exited with code 0 (0x0).

The thread 0xa16c has exited with code 0 (0x0).

The thread 0x6754 has exited with code 0 (0x0).

The thread 0x9054 has exited with code 0 (0x0).

The thread 0x9fe4 has exited with code 0 (0x0).

The thread 0x12360 has exited with code 0 (0x0).

The thread 0x31f8 has exited with code 0 (0x0).

The thread 0x3214 has exited with code 0 (0x0).

The thread 0x7968 has exited with code 0 (0x0).

The thread 0xbe84 has exited with code 0 (0x0).

The thread 0x11720 has exited with code 0 (0x0).

The thread 0xde10 has exited with code 0 (0x0).

The thread 0x5848 has exited with code 0 (0x0).

The thread 0x107fc has exited with code 0 (0x0).

The thread 0x6e04 has exited with code 0 (0x0).

The thread 0x6e90 has exited with code 0 (0x0).

The thread 0x2b18 has exited with code 0 (0x0).

The thread 0xa8c0 has exited with code 0 (0x0).

The thread 0xbd08 has exited with code 0 (0x0).

The thread 0x1262c has exited with code 0 (0x0).

The thread 0x12140 has exited with code 0 (0x0).

The thread 0x8044 has exited with code 0 (0x0).

The thread 0x6208 has exited with code 0 (0x0).

The thread 0x83f8 has exited with code 0 (0x0).

The thread 0x10734 has exited with code 0 (0x0).

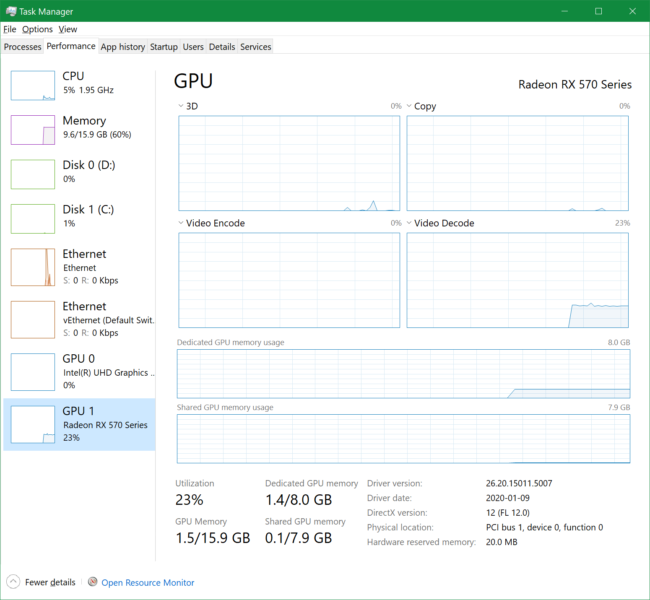

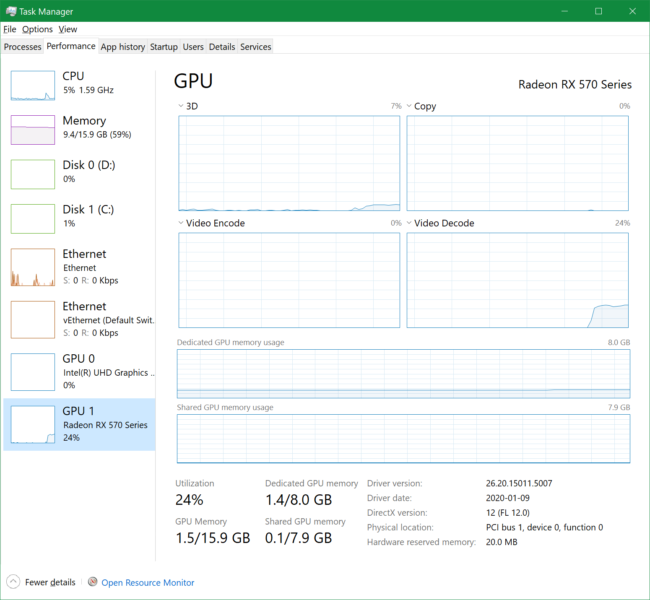

For whatever reason, they create a thread for every processed video frame, or close to it… Resource utilization and performance suffer accordingly. Imagine processing a video feed from a high frame rate camera. The decoder itself, including its AMF runtime overhead, decodes an image in a millisecond or less, but they spoiled it with absurd threading, topped with other bugs.
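To get a feel for why a thread per frame is wasteful, here is a rough Python analogy. It does not reproduce anything from AMD's driver: `decode_stub` is a hypothetical stand-in for the sub-millisecond decode, and the two runner functions only contrast creating a fresh thread for every frame with feeding all frames to one long-lived worker. Python threads are not native driver threads, so the timings show the general shape of the overhead, not its actual size.

```python
import queue
import threading
import time


def decode_stub(frame_index, results):
    """Hypothetical stand-in for a fast hardware decode; records the frame index."""
    results.append(frame_index)


def run_thread_per_frame(frame_count):
    """Create, start and join a new thread for every single frame."""
    results = []
    for index in range(frame_count):
        worker = threading.Thread(target=decode_stub, args=(index, results))
        worker.start()
        worker.join()
    return results, frame_count  # one thread created per frame


def run_single_worker(frame_count):
    """Feed all frames through a queue to one long-lived worker thread."""
    results = []
    frames = queue.Queue()

    def worker_loop():
        while True:
            index = frames.get()
            if index is None:  # sentinel: no more frames
                break
            decode_stub(index, results)

    worker = threading.Thread(target=worker_loop)
    worker.start()
    for index in range(frame_count):
        frames.put(index)
    frames.put(None)
    worker.join()
    return results, 1  # a single thread for the whole stream


if __name__ == "__main__":
    for runner in (run_thread_per_frame, run_single_worker):
        started = time.perf_counter()
        _, thread_count = runner(2000)
        elapsed_ms = (time.perf_counter() - started) * 1000
        print(f"{runner.__name__}: {thread_count} threads, {elapsed_ms:.1f} ms")
```

Both variants process the same frames in the same order; the only difference is how many threads are created along the way, which is exactly what the debugger output above makes visible.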

However, AMD video cards still have the hardware implementation of the codec, and this capability is also exposed via their AMF SDK.

AMFVideoDecoderUVD_MJPEG

Acceleration Type: AMF_ACCEL_HARDWARE

AMF_VIDEO_DECODER_CAP_NUM_OF_STREAMS: 16

CodecId AMF_VARIANT_INT64 7

DPBSize AMF_VARIANT_INT64 1

NumOfStreams AMF_VARIANT_INT64 16

Input

Width Range: 32 - 7,680

Height Range: 32 - 4,320

Vertical Alignment: 32

Format Count: 0

Memory Type Count: 1

Memory Type: AMF_MEMORY_HOST Native

Interlace Support: 1

Output

Width Range: 32 - 7,680

Height Range: 32 - 4,320

Vertical Alignment: 32

Format Count: 4

Format: AMF_SURFACE_YUY2

Format: AMF_SURFACE_NV12 Native

Format: AMF_SURFACE_BGRA

Format: AMF_SURFACE_RGBA

Memory Type Count: 1

Memory Type: AMF_MEMORY_DX11 Native

Interlace Support: 1
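The numbers in this dump can be turned into a quick sanity check before feeding a camera stream to the decoder. The Python sketch below hard-codes the ranges and formats listed above; the `DecoderCaps` class and its field names are hypothetical and are not AMF SDK types. Treating "Vertical Alignment: 32" as "surface height is rounded up to a multiple of 32" is an assumption on my side, not something the dump states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderCaps:
    """Values copied from the capability dump; the structure itself is hypothetical."""
    min_width: int = 32
    max_width: int = 7680
    min_height: int = 32
    max_height: int = 4320
    vertical_alignment: int = 32
    output_formats: tuple = ("YUY2", "NV12", "BGRA", "RGBA")


def fits(caps, width, height):
    """True when the frame size lies within the reported width and height ranges."""
    return (caps.min_width <= width <= caps.max_width
            and caps.min_height <= height <= caps.max_height)


def aligned_height(caps, height):
    """Round height up to the next multiple of the vertical alignment (assumed meaning)."""
    alignment = caps.vertical_alignment
    return (height + alignment - 1) // alignment * alignment


if __name__ == "__main__":
    caps = DecoderCaps()
    for width, height in ((1920, 1080), (3840, 2160), (8192, 4320)):
        print(width, height, fits(caps, width, height), aligned_height(caps, height))
```

Under that assumption a 1920×1080 stream would be decoded into a surface 1088 lines high, which is worth keeping in mind when copying the output.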

I guess they stop harassing developers once those switch from the out-of-the-box MFT to the SDK interface of their decoder. “AMD MFT MJPEG Decoder” is highly likely just a wrapper over the AMF interface, and my guess is that the problematic part is exactly the abandoned wrapper and not the core functionality.