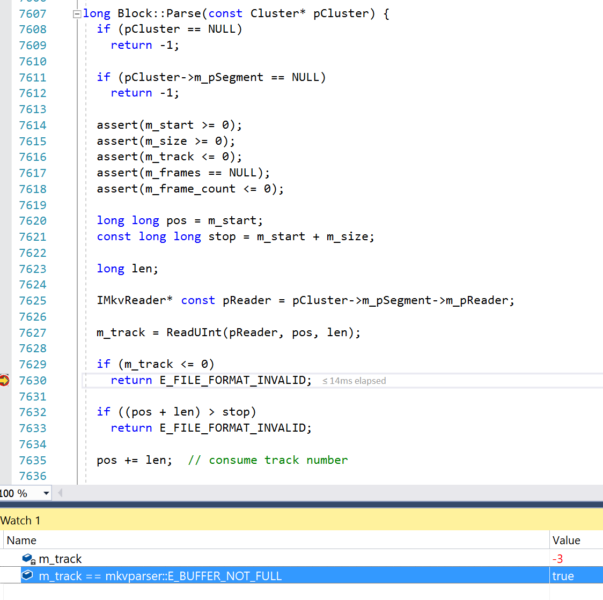

A magic transition from E_BUFFER_NOT_FULL to E_FILE_FORMAT_INVALID in the depths of libwebm…

Media.dll!mkvparser::Block::Parse(const mkvparser::Cluster * pCluster) Line 7630 C++

Media.dll!mkvparser::BlockGroup::Parse() Line 7579 C++

Media.dll!mkvparser::Cluster::CreateBlockGroup(__int64 start_offset, __int64 size, __int64 discard_padding) Line 7253 C++

Media.dll!mkvparser::Cluster::CreateBlock(__int64 id, __int64 pos, __int64 size, __int64 discard_padding) Line 7154 C++

Media.dll!mkvparser::Cluster::ParseBlockGroup(__int64 payload_size, __int64 & pos, long & len) Line 6724 C++

Media.dll!mkvparser::Cluster::Parse(__int64 & pos, long & len) Line 6381 C++

Media.dll!mkvparser::Cluster::GetNext(const mkvparser::BlockEntry * pCurr, const mkvparser::BlockEntry * & pNext) Line 7369 C++

Media.dll!WebmLiveMediaSource::HandleData(std::vector,std::allocator > > & AsyncResultVector) Line 757 C++

Media.dll!WebmLiveMediaSource::ReadInvoke(AsyncCallbackT * AsyncCallback, IMFAsyncResult * AsyncResult) Line 1176 C++

Media.dll!AsyncCallbackT::Invoke(IMFAsyncResult * AsyncResult) Line 2344 C++

RTWorkQ.dll!CSerialWorkQueue::QueueItem::ExecuteWorkItem() Unknown

RTWorkQ.dll!CSerialWorkQueue::QueueItem::OnWorkItemAsyncCallback::Invoke() Unknown

RTWorkQ.dll!ThreadPoolWorkCallback() Unknown

ntdll.dll!TppWorkpExecuteCallback() Unknown

ntdll.dll!TppWorkerThread() Unknown

kernel32.dll!BaseThreadInitThunk () Unknown

ntdll.dll!RtlUserThreadStart () Unknown

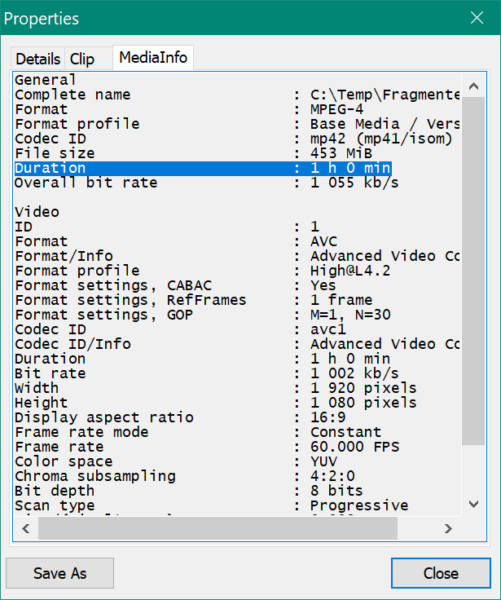

The problem here is that the stream is live and becomes available in increments, in ultra-low-latency consumption mode. The structure of the libwebm code does not suggest it is sufficiently robust for such processing (or maybe it’s good and it’s just one bug? who can tell), even though it apparently has multiple code paths added for live signal. There is no problem parsing a complete file, of course, and even a retry from the same point succeeds once new data has been appended.

It looks like a reasonable workaround here is to check whether we are close to the edge of the stream and temporarily ignore errors like this.
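A minimal sketch of that workaround, under a few assumptions: the numeric values mirror the error constants declared in libwebm's `mkvparser` namespace, while `ParseAction`, `ClassifyParseResult` and the 64 KB `kLiveEdgeMargin` are hypothetical names and values chosen for illustration, not part of libwebm or of the actual media source. The idea is to downgrade E_FILE_FORMAT_INVALID to "wait and retry" when the parse position is within a small margin of the data received so far.

```cpp
#include <cstdint>

// Values mirror the error codes declared in libwebm's mkvparser namespace.
constexpr long kParseFailed = -1;        // mkvparser::E_PARSE_FAILED
constexpr long kFileFormatInvalid = -2;  // mkvparser::E_FILE_FORMAT_INVALID
constexpr long kBufferNotFull = -3;      // mkvparser::E_BUFFER_NOT_FULL

// Hypothetical outcome type for the caller of the parser.
enum class ParseAction { Continue, WaitForData, Fail };

// Hypothetical margin: how close to the end of received data counts as "the edge".
constexpr std::int64_t kLiveEdgeMargin = 64 * 1024;

ParseAction ClassifyParseResult(long status,
                                std::int64_t parsePosition,
                                std::int64_t availableSize,
                                std::int64_t margin = kLiveEdgeMargin)
{
    if (status >= 0)
        return ParseAction::Continue;
    if (status == kBufferNotFull)
        return ParseAction::WaitForData;
    // Near the live edge a "format invalid" result may only mean the element
    // is still incomplete, so retry from the same point once more data arrives.
    const bool nearLiveEdge = availableSize - parsePosition <= margin;
    if (status == kFileFormatInvalid && nearLiveEdge)
        return ParseAction::WaitForData;
    return ParseAction::Fail;
}
```

The same error reported far from the edge is still treated as fatal, so genuinely corrupt data is not retried forever.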

Library parsing performance/efficiency is also a bit questionable. The library cannot process incremental reads via mkvparser::IMkvReader. Instead it keeps stepping back throughout the parsing process, and the live signal source has to retain some already-processed data because it can be requested once again…
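One way to cope with the re-reads is a reader that keeps a sliding window of recent data. The sketch below is only an illustration: `ReaderInterface` is a local stand-in that mirrors the two methods of `mkvparser::IMkvReader` so the code compiles without libwebm, and `LiveWindowReader`, `Append` and `Trim` are hypothetical names. Returning -3 (the value of E_BUFFER_NOT_FULL) for data that has not arrived yet, and -1 for data already discarded, is an assumption of this sketch rather than documented library behavior.

```cpp
#include <algorithm>
#include <cstddef>
#include <deque>

// Local stand-in mirroring mkvparser::IMkvReader (assumption: same two methods).
struct ReaderInterface {
    virtual int Read(long long pos, long len, unsigned char* buf) = 0;
    virtual int Length(long long* total, long long* available) = 0;
    virtual ~ReaderInterface() = default;
};

class LiveWindowReader : public ReaderInterface {
public:
    // Called by the live source whenever a new increment of the stream arrives.
    void Append(const unsigned char* data, std::size_t size) {
        window_.insert(window_.end(), data, data + size);
    }

    // Discards data before parsedPos, but keeps `retain` bytes behind it
    // because the parser may step back and request them again.
    void Trim(long long parsedPos, long long retain) {
        const long long end = windowStart_ + static_cast<long long>(window_.size());
        const long long keepFrom =
            std::min(std::max(windowStart_, parsedPos - retain), end);
        window_.erase(window_.begin(),
                      window_.begin() + static_cast<std::ptrdiff_t>(keepFrom - windowStart_));
        windowStart_ = keepFrom;
    }

    int Read(long long pos, long len, unsigned char* buf) override {
        if (len < 0 || pos < windowStart_)
            return -1;  // invalid request, or data already discarded
        const long long end = windowStart_ + static_cast<long long>(window_.size());
        if (pos + len > end)
            return -3;  // not received yet (value of E_BUFFER_NOT_FULL)
        std::copy_n(window_.begin() + static_cast<std::ptrdiff_t>(pos - windowStart_),
                    len, buf);
        return 0;
    }

    int Length(long long* total, long long* available) override {
        if (total)
            *total = -1;  // total size is unknown for a live stream
        if (available)
            *available = windowStart_ + static_cast<long long>(window_.size());
        return 0;
    }

private:
    std::deque<unsigned char> window_;  // retained bytes
    long long windowStart_ = 0;         // stream position of window_[0]
};
```

How many bytes to retain is a tuning question; too small a value turns a step back by the parser into a hard read failure.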

Apparently UWP as a platform has code capable of processing this type of data reliably, as MediaElement has built-in support for the format in Media Source Extensions (MSE) mode. However, this implementation is available only to internal consumers.