Fooling Around

// Software by Roman Ryltsov

ManifestComDependency: Adding/Removing Registration-Free COM dependencies

In one of the earlier posts I mentioned a problem with registration-free COM dependencies set up by Visual Studio as a part of the build process. This post is about a tool that offers an alternative solution to the problem, and also gives more:

- standalone, independent from build, addition/removal of registration-free COM links

- automation of registration-free COM links for native code builds

Unless one deals with ACTCTX activation contexts programmatically, taking advantage of registration-free COM is essentially a setup of respective side-by-side manifests, either standalone or embedded into executable. The manifests override standard global COM object lookup procedure and point to COM servers directly, in some way similar to the way static links to exported DLL functions work.

To enable a registration-free COM reference, the COM client needs to prepare a respective manifest file (declare an assembly) and list its COM dependencies. The COM dependency list is not just COM server files but rather their COM classes and type libraries. That is, when it comes to automation, the tool needs to go over the COM servers and extract the respective identifiers, so that it can properly shape this information and include it in the manifest XML.

To deal with the challenge, I am using the ManifestComDependency tool (no better name came to mind when it was created). The command line tool syntax is the following:
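To give an idea of what ends up in the manifest, here is a minimal Python sketch that builds the XML with an `assemblyIdentity` element and one `file` element per COM server, each listing `comClass` and `typelib` children. This is an illustration only, not the tool's code: `build_manifest` is a hypothetical helper and the identifiers in the usage note are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace used by side-by-side assembly manifests
ASM_NS = "urn:schemas-microsoft-com:asm.v1"


def build_manifest(assembly_name, version, architecture, dependencies):
    """Return manifest XML as a string.

    dependencies is a list of dicts with keys:
      'file'    - COM server file name
      'classes' - list of (clsid, threading_model) tuples
      'typelib' - optional (tlbid, version) tuple
    """
    ET.register_namespace("", ASM_NS)
    root = ET.Element(f"{{{ASM_NS}}}assembly", {"manifestVersion": "1.0"})
    ET.SubElement(root, f"{{{ASM_NS}}}assemblyIdentity", {
        "type": "win32",
        "name": assembly_name,
        "version": version,
        "processorArchitecture": architecture,
    })
    for dependency in dependencies:
        file_element = ET.SubElement(
            root, f"{{{ASM_NS}}}file", {"name": dependency["file"]})
        for clsid, threading_model in dependency.get("classes", []):
            ET.SubElement(file_element, f"{{{ASM_NS}}}comClass", {
                "clsid": clsid,
                "threadingModel": threading_model,
            })
        if dependency.get("typelib"):
            tlbid, tlb_version = dependency["typelib"]
            ET.SubElement(file_element, f"{{{ASM_NS}}}typelib", {
                "tlbid": tlbid,
                "version": tlb_version,
                "helpdir": "",
            })
    return ET.tostring(root, encoding="unicode")
```

For example, `build_manifest("BatchExport", "1.0.0.0", "amd64", [{"file": "Player.dll", "classes": [("{00000000-0000-0000-0000-000000000001}", "Both")]}])` produces an assembly with a single file dependency; the CLSID here is made up.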

ManifestComDependency <path> [options]

Options:

* /i[:<assembly-name>] - updates <assemblyIdentity> element replacing it with automatically generated

* /a[:tc] <dependency-path>[|LIBID[;X.Y]] - adds file <dependency-path> dependency, optionally without type library and COM classes reference

* /r <dependency-path> - removes dependency from file <dependency-path>

* /e <export-path> - exports manifest to file <export-path>

The tool opens a file <path> for manifest modification. The file can be a binary file, such as .exe or .dll, in which case the tool will be working with embedded manifest resource. Or, if the path extension is .manifest or .xml, then the file is treated as standalone XML manifest file. Both options are good for manifests associated with .NET builds as well, even though this surely does not need to be managed code development – native COM consumers are okay.

/i option updates the <assemblyIdentity> element in the manifest data because it is mandatory and at the same time it might so happen that it is missing or incomplete, which ends up in painful troubleshooting. The tool will take the file name, architecture and version information from the binary resource information (product version; the VERSIONINFO resource has to be present).

/a option adds a dependency, defined by either a path or a registered type library identifier the tool would use to resolve the path. t and c modifiers exclude type library and COM server information respectively. The tool creates a file element for the dependency and populates it with COM servers discovered there. Type library is obtained using LoadTypeLib API and COM servers are picked up by a trick with RegOverridePredefKey and friends (e.g. see example in regsvr42 source or GraphStudioNext source – both are excellent sources of code snippets for the curious).

The task of COM server discovery assumes load of the library into process (LoadLibrary API call), which assumes bitness match between the tool and the loaded module. This is where you need both Win32 and x64 builds of the tool: if wrong bitness is detected, the tool restarts its sister build passing respective command line arguments, just like regsvr32 does with the only difference that regsvr32 uses binaries in system32 and syswow64 directories, and my tool looks for -Win32 and -x64 suffixes and the files in the same directory.
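One way to tell the bitness of a module without loading it is to read the Machine field from its PE header and map it to the suffix of the matching build. The sketch below is only an assumption about how such a check could look, not what the tool actually does; `pe_machine`, `sister_build_suffix` and `fake_pe` are hypothetical names, and `fake_pe` exists only to produce a tiny synthetic header for experiments.

```python
import struct

# Machine values from the PE/COFF file header
IMAGE_FILE_MACHINE_I386 = 0x014C
IMAGE_FILE_MACHINE_AMD64 = 0x8664


def pe_machine(data: bytes) -> int:
    """Return the Machine field of a PE image given its leading bytes."""
    if data[:2] != b"MZ":
        raise ValueError("not an MZ executable")
    # e_lfanew at offset 0x3C points to the "PE\0\0" signature
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("PE signature not found")
    # Machine is the first field of the file header after the signature
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return machine


def sister_build_suffix(machine: int) -> str:
    """Map a machine type to the file name suffix of the matching build."""
    suffixes = {
        IMAGE_FILE_MACHINE_I386: "-Win32",
        IMAGE_FILE_MACHINE_AMD64: "-x64",
    }
    return suffixes[machine]


def fake_pe(machine: int) -> bytes:
    """Build a minimal synthetic header, for demonstration only."""
    header = bytearray(0x40)
    header[:2] = b"MZ"
    struct.pack_into("<I", header, 0x3C, 0x40)
    return bytes(header) + b"PE\0\0" + struct.pack("<H", machine)
```

With a real file one would pass the first kilobyte or so, e.g. `pe_machine(open(path, "rb").read(1024))`, and compare the result with the bitness of the running process.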

/r option removes dependencies by name. It is safe to remove certain dependency before adding it back because addition does not check if dependency already exists.

/e option writes the current version of the manifest to an external file, esp. for review and troubleshooting if the actual manifest is embedded.

The order of options is important because they are executed one by one in the order of appearance. Then the result is saved/re-embedded once all tasks are done.

Command line example of re-adding registration-free COM dependencies:

ManifestComDependency-x64.exe BatchExport-x64.exe /i /r Player.dll /r mp4demux.dll /a Player.dll /a mp4demux.dll

This can be set as post-build event in the project settings, or put into batch file or run on-demand.
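Since addition does not check for existing entries and options run in order, a build script should emit all /r options before the /a options. A small Python sketch of composing such a command line follows; `readd_command` is a hypothetical helper, and only the options described above are used.

```python
def readd_command(tool, target, dependencies, update_identity=True):
    """Compose an argument list that removes and then re-adds dependencies."""
    arguments = [tool, target]
    if update_identity:
        arguments.append("/i")
    # Removal goes first because /a does not check for existing entries
    for dependency in dependencies:
        arguments += ["/r", dependency]
    for dependency in dependencies:
        arguments += ["/a", dependency]
    return arguments


command = readd_command(
    "ManifestComDependency-x64.exe",
    "BatchExport-x64.exe",
    ["Player.dll", "mp4demux.dll"],
)
print(" ".join(command))
```

The resulting list can be handed to `subprocess.run(command, check=True)` from a build script on a machine where the tool is available.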

Download links

- Binaries:

- 32-bit: ManifestComDependency-Win32.exe

- 64-bit: ManifestComDependency-x64.exe

- License: This software is free to use

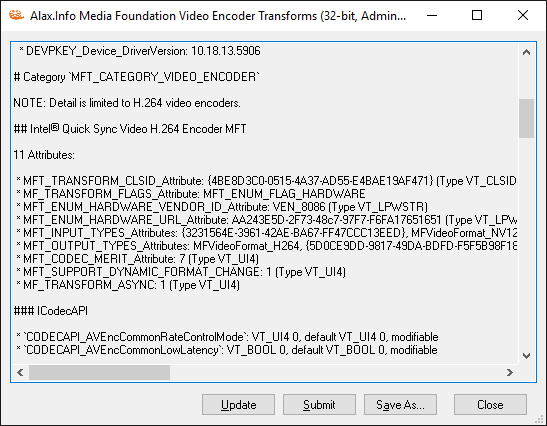

MediaFoundationVideoEncoderTransforms: Detecting support for hardware H.264 video encoders

H.264 (MPEG-4 Part 10, also known as MPEG-4 AVC) video encoding was never packaged into a DirectShow filter by Microsoft; instead they offered a Media Foundation Transform (MFT) for the job: H.264 Video Encoder. Further development gave us third-party video encoders also packaged as MFTs. Hardware-backed MFTs became a part of video hardware drivers; although they are a part of the Media Foundation API, they can also be used standalone very well.

Detection of H.264 encoding capabilities? MediaFoundationVideoEncoderTransforms is here to help.

H.264 options include:

- H264 Encoder MFT

- Intel® Quick Sync Video H.264 Encoder MFT

- NVIDIA H.264 Encoder MFT

- AMDh264Encoder

H.265 encoding will apparently be (already is) using the same encoder packaging.

The tool enumerates the transforms and provides details, similar to the Enumerating Media Foundation Transforms (MFTs) application (source code available).

# System

[…]

# Display Devices <<– Same as in Device Manager

* AMD Radeon R7 200 Series

* Instance: PCI\VEN_1002&DEV_6610&SUBSYS_22BF1458&REV_00\4&2DB3ECDA&0&0008

* DEVPKEY_Device_Manufacturer: Advanced Micro Devices, Inc.

* DEVPKEY_Device_DriverVersion: 15.201.1151.1008

* Intel(R) HD Graphics 530 <<– Video adapter Intel QSV is available through (if you don’t see Intel video, then maybe it needs to be turned on in BIOS)

* Instance: PCI\VEN_8086&DEV_1912&SUBSYS_D0001458&REV_06\3&11583659&0&10

* DEVPKEY_Device_Manufacturer: Intel Corporation

* DEVPKEY_Device_DriverVersion: 20.19.15.4331

# Category `MFT_CATEGORY_VIDEO_ENCODER`

NOTE: Detail is limited to H.264 video encoders.

## Intel® Quick Sync Video H.264 Encoder MFT <<– The guy that does Intel QSV encoding

[…]

## H264 Encoder MFT <<– Stock Microsoft software H.264 implementation, available since Windows 7

[…]

(Other encoders like those from AMD, Nvidia will also be listed)

## Intel® Hardware H265 Encoder MFT <<– Intel also provides now H.265 hardware encoding option

[…]

## H265 Encoder MFT

[…]

Download links

- Binaries:

- 32-bit: MediaFoundationVideoEncoderTransforms-Win32.exe

- 64-bit: MediaFoundationVideoEncoderTransforms-x64.exe

- License: This software is free to use

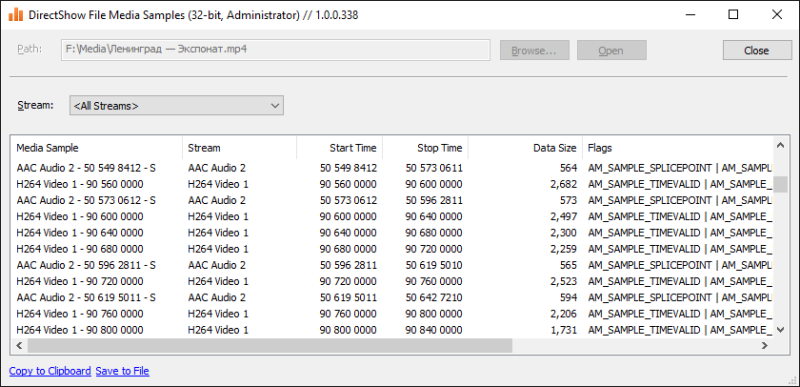

DirectShowFileMediaSamples Update: Command Line Mode

It appears that the tool was never mentioned before (other than in the general software list). The application takes a media file as input and applies the respective DirectShow demultiplexer to list individual media samples.

- for MP4 files the application attempts to use GDCL MPEG-4 Demultiplexer first

- it is possible to filter a specific track/stream

- ability to copy data to clipboard or save to file

- drag and drop a file to get it processed

Now the tool has command line mode too:

DirectShowFileMediaSamples-Win32.exe input-path [/no-video] [/no-audio] [output-path]

- /no-video – excludes video tracks

- /no-audio – excludes audio tracks

Default output path is input path with extension renamed to .TSV. If DirectShowSpy is installed, the file also contains filter graph information used (esp. media types).

For example,

D:\>DirectShowFileMediaSamples-Win32.exe "F:\Media\Ленинград — Экспонат.mp4"

Typical command line use: troubleshooting export/transcoding sessions where, on completion, you need textual information about the export to verify the time accuracy of individual samples: start and stop times, gaps etc.

Interactively one can also achieve the same goal using GraphStudioNext's built-in Analyzer Filter.
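Once sample times are in text form, checking continuity is a few lines of script. The Python sketch below works on (start, stop) pairs in 100 ns units, as DirectShow reports them; how the pairs are read from the .TSV file depends on the column layout, which is not described here, so `find_gaps` is a hypothetical helper operating on already parsed values.

```python
def find_gaps(samples, tolerance=0):
    """Return (index, difference) for samples that do not start where
    the previous sample stopped.

    samples   - iterable of (start, stop) pairs in 100 ns units
    tolerance - maximal accepted absolute difference, same units
    A positive difference is a gap, a negative one is an overlap.
    """
    gaps = []
    previous_stop = None
    for index, (start, stop) in enumerate(samples):
        if previous_stop is not None:
            difference = start - previous_stop
            if abs(difference) > tolerance:
                gaps.append((index, difference))
        previous_stop = stop
    return gaps
```

A small tolerance helps with frame rates like 29.97 fps, where rounding to 100 ns units can introduce one-unit differences.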

Download links

- Binaries:

- 32-bit: DirectShowFileMediaSamples-Win32.exe

- 64-bit: DirectShowFileMediaSamples-x64.exe

- License: This software is free to use

Fragile MFEnumDeviceSources

As I mentioned in an earlier post, Media Foundation video capture capability internally uses undocumented categories, with pretty much the same transforms and enumeration that apply to the documented categories.

Without documenting it as a public contract, MFEnumDeviceSources enumerates transforms, builds a standard IMFMediaSource implementation on top of an available transform and… voila! Media Foundation offers “lightweight” API for video/audio capture. Lightweight presumably stands for “of substandard quality” here. It would be lightweight if they documented the transforms and the enumeration API, made it extensible, decoupled their media source implementation from the transform, and documented the internal communication between the two. However, it seems that there has been no progress in this direction since Windows 7.

The implementation is good in that it delivers on the documented promises; however, whereas earlier APIs were developer-friendly and well extensible, here it is just a minimal implementation that does not offer much to people. Even though it could be extensible, it looks like Microsoft simply decided not to spend effort on bringing it to a feature-rich state, clean and open to developers.

A simple example: it is still possible to register your own transform into undocumented category. MFEnumDeviceSources implementation does see it, picks it up. Not a hardware transform? No go – we only offer media source functionality for hardware transform (actually for no apparent reason, other than possibly to put a stop to custom extensions). Hardware transform? No go – we cannot accept it for another reason, we only offer media source implementation for hardware transforms we “like”. The most confusing part is that rejection of unsupported transform might cause fatal failure in the enumeration loop: the rejection will make other, supported transforms and devices unavailable to applications. I suppose that once the loop encounters unsupported device, it simply forwards the failure upwards invalidating devices already found and yet to be enumerated. It is not the way the API should be developed, but it is okay – the category remains internal and undocumented.
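To illustrate the supposition about the enumeration loop, here is a toy Python model; it is not based on the actual MFEnumDeviceSources code, which is not public, and all names in it are made up. The fragile variant lets the first rejection escape and lose everything, the tolerant variant skips the offender and carries on.

```python
class UnsupportedTransform(Exception):
    """Raised when a media source cannot be built over a transform."""


def create_source(transform):
    # Hypothetical acceptance rule: only "liked" hardware transforms pass
    if not transform["hardware"] or not transform["liked"]:
        raise UnsupportedTransform(transform["name"])
    return "source:" + transform["name"]


def enumerate_fragile(transforms):
    """Any rejection aborts the loop; devices already found are lost."""
    sources = []
    for transform in transforms:
        sources.append(create_source(transform))
    return sources


def enumerate_tolerant(transforms):
    """A rejected transform is skipped; the others remain available."""
    sources = []
    for transform in transforms:
        try:
            sources.append(create_source(transform))
        except UnsupportedTransform:
            continue
    return sources


transforms = [
    {"name": "webcam", "hardware": True, "liked": True},
    {"name": "custom", "hardware": False, "liked": False},
    {"name": "capture-card", "hardware": True, "liked": True},
]
```

With this input the tolerant loop still returns both supported devices, while the fragile one fails as a whole, which matches the observed behavior.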

Follow up: mixed parallel H.264 encoding, Intel® Quick Sync Video H.264 Encoder MFT + NVIDIA H.264 Encoder MFT

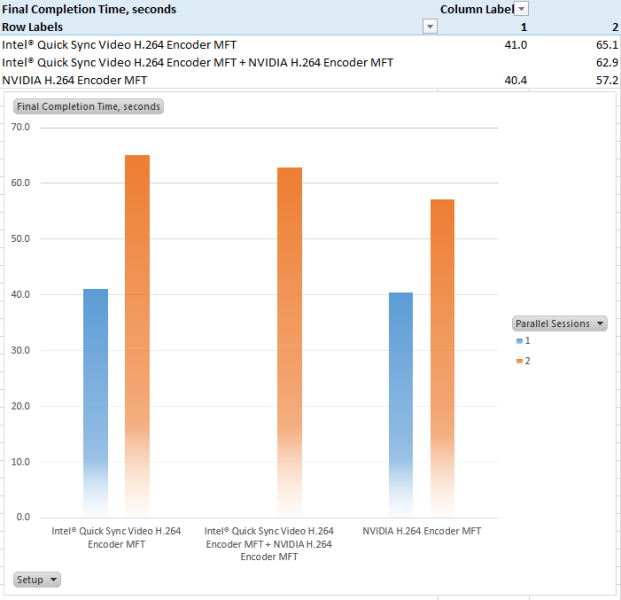

A scenario that was left out of the previous post is mixed simultaneous encoding using both hardware encoders. Rationale: the Intel QSV encoder might exist as a “free” capability of the motherboard (provided with the onboard video adapter), while the other one might be available with a video adapter intentionally plugged in (including for other reasons, such as to power a dual monitor system etc).

From this standpoint, it might be interesting to see if one can benefit from using both encoders.

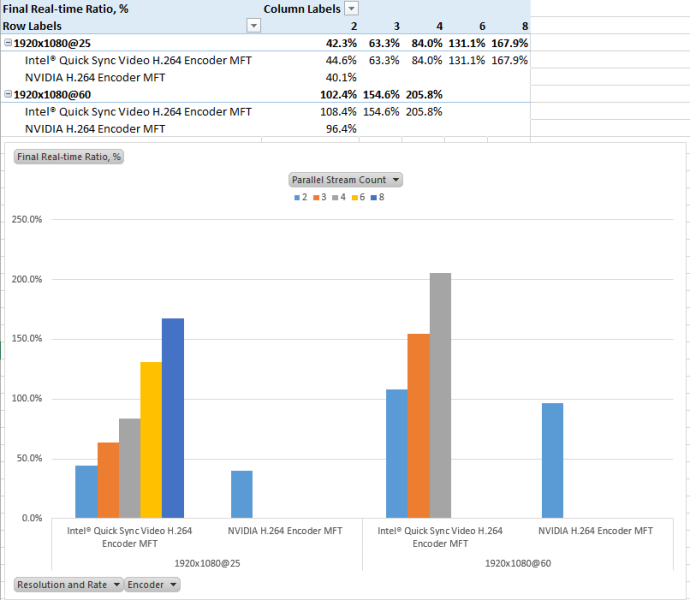

Two filter graphs are set to produce 60 seconds of 1080p60 video as soon as possible, and are started simultaneously. The chart below shows completion time, side by side with those for runs of one and two sessions of each encoder separately.

Informational: in single stream runs CPU load was around 30%, two session runs – around 50%, of which the part that synthesizes and converts the video to compatible MFT input format took 5-6% of CPU time overall. Or, if computed against 60 seconds of CPU time of eight core CPU, the synthesis-and-conversion itself consumed <4% CPU time for one stream, and <7% for dual stream runs.

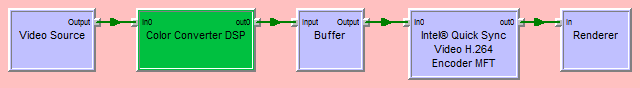

Encoding H.264 video using hardware MFTs

Some time ago there were some pictures explaining performance and other properties of a software H.264 encoder (x264). This time it is the turn of hardware H.264 encoders, and moreover, two of them side by side. Both encoders are nothing new: Intel® Quick Sync Video H.264 Encoder and NVIDIA H.264 Encoder have already been around for a while. Some would say it is already time for H.265 encoders.

Either way, on my test machine both encoders are available without additionally installed software (that is, no need for Intel Media SDK, Nvidia NVENC, redistributable files etc.). Out of the box, Windows 10 offers stock software only encoder, and hardware encoders in form factor of Media Foundation Transform (MFT).

Environment:

- OS: Windows 10 Pro

- CPU: Intel i7-4790

- Video Adapter 1: Intel HD Graphics 4600 (on-board, not connected to monitors)

- Video Adapter 2: NVIDIA GeForce GTX 750

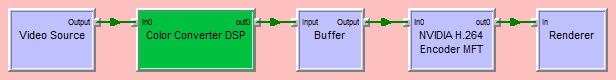

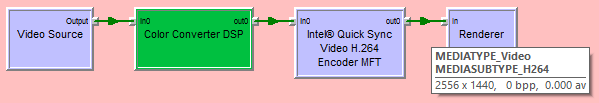

It is not convenient or fun to do things with Media Foundation, but the good news is that Media Foundation components are well separable. A wrapper over MFTs converts them into DirectShow filters and makes them available to DirectShow, where it is already way easier to run various test runs. The pictures below show metrics for encoder defaults (bitrate, profiles and many other options that create a great deal of encoding modes). Still the pictures do show that both encoders are well usable for many scenarios including HD processing, simultaneous data processing etc.

Test runs are as simple as taking reference video source signal of different properties, pushing it through encoder filter and either writing to a file (to inspect the footage) or to Null Renderer Filter to measure performance.

Intel® Quick Sync Video H.264 Encoder produces files like these: 720×480.mp4, 2556×1440.mp4, which are of decent quality (with respect to low bitrate and “hard to handle” background changes). NVIDIA H.264 Encoder produces somewhat better output supposedly by choosing higher bitrate. Either way, both encoders have a number of ways to fine tune the encoding process. Not just bitrate, profile, GOP length, B frame settings but even more sophisticated parameters.

Intel® Quick Sync Video H.264 Encoder MFT

CODECAPI_AVEncCommonRateControlMode: VT_UI4 0, default VT_UI4 0, modifiable // eAVEncCommonRateControlMode_CBR = 0

CODECAPI_AVEncCommonQuality: minimal VT_UI4 0, maximal VT_EMPTY, step VT_EMPTY

CODECAPI_AVEncCommonBufferSize: VT_UI4 3131961357, default VT_UI4 0, modifiable

CODECAPI_AVEncCommonMaxBitRate: default VT_UI4 0

CODECAPI_AVEncCommonMeanBitRate: VT_UI4 3131961357, default VT_UI4 2222000, modifiable

CODECAPI_AVEncCommonQualityVsSpeed: VT_UI4 50, default VT_UI4 50, modifiable

CODECAPI_AVEncH264CABACEnable: modifiable

CODECAPI_AVEncMPVDefaultBPictureCount: VT_UI4 0, default VT_UI4 0, modifiable

CODECAPI_AVEncMPVGOPSize: VT_UI4 128, default VT_UI4 128, modifiable

CODECAPI_AVEncVideoEncodeQP:

CODECAPI_AVEncVideoForceKeyFrame: VT_UI4 0, default VT_UI4 0, modifiable

CODECAPI_AVLowLatencyMode: VT_BOOL 0, default VT_BOOL 0, modifiable

CODECAPI_AVEncVideoLTRBufferControl: VT_UI4 65536, values { VT_UI4 65536, VT_UI4 65537, VT_UI4 65538, VT_UI4 65539, VT_UI4 65540, VT_UI4 65541, VT_UI4 65542, VT_UI4 65543, VT_UI4 65544, VT_UI4 65545, VT_UI4 65546, VT_UI4 65547, VT_UI4 65548, VT_UI4 65549, VT_UI4 65550, VT_UI4 65551, VT_UI4 65552 }, modifiable

CODECAPI_AVEncVideoMarkLTRFrame:

CODECAPI_AVEncVideoUseLTRFrame:

CODECAPI_AVEncVideoEncodeFrameTypeQP: default VT_UI8 111670853658, minimal VT_UI8 0, maximal VT_UI8 219046674483, step VT_UI8 1

CODECAPI_AVEncSliceControlMode: VT_UI4 0, default VT_UI4 2, minimal VT_UI4 2, maximal VT_UI4 2, step VT_UI4 0, modifiable

CODECAPI_AVEncSliceControlSize: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 8160, step VT_UI4 1, modifiable

CODECAPI_AVEncVideoMaxNumRefFrame: minimal VT_UI4 0, maximal VT_UI4 16, step VT_UI4 1, modifiable

CODECAPI_AVEncVideoTemporalLayerCount: default VT_UI4 1, minimal VT_UI4 1, maximal VT_UI4 3, step VT_UI4 1, modifiable

CODECAPI_AVEncMPVDefaultBPictureCount: VT_UI4 0, default VT_UI4 0, modifiable

NVIDIA H.264 Encoder MFT

CODECAPI_AVEncCommonRateControlMode: VT_UI4 0

CODECAPI_AVEncCommonQuality: VT_UI4 65

CODECAPI_AVEncCommonBufferSize: VT_UI4 8923353

CODECAPI_AVEncCommonMaxBitRate: VT_UI4 8923353

CODECAPI_AVEncCommonMeanBitRate: VT_UI4 2974451

CODECAPI_AVEncCommonQualityVsSpeed: VT_UI4 33

CODECAPI_AVEncH264CABACEnable: VT_BOOL -1

CODECAPI_AVEncMPVGOPSize: VT_UI4 50

CODECAPI_AVEncVideoEncodeQP: VT_UI8 26

CODECAPI_AVEncVideoForceKeyFrame:

CODECAPI_AVEncVideoMinQP: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 51, step VT_UI4 1

CODECAPI_AVLowLatencyMode: VT_BOOL 0

CODECAPI_AVEncVideoLTRBufferControl: VT_UI4 0, values { VT_I4 65537, VT_I4 65538 }

CODECAPI_AVEncVideoMarkLTRFrame:

CODECAPI_AVEncVideoUseLTRFrame:

CODECAPI_AVEncVideoEncodeFrameTypeQP: VT_UI8 111670853658

CODECAPI_AVEncSliceControlMode: VT_UI4 2, minimal VT_UI4 0, maximal VT_UI4 2, step VT_UI4 1

CODECAPI_AVEncSliceControlSize: VT_UI4 0, minimal VT_UI4 0, maximal VT_UI4 3, step VT_UI4 1

CODECAPI_AVEncVideoMaxNumRefFrame: VT_UI4 1, minimal VT_UI4 0, maximal VT_UI4 16, step VT_UI4 1

CODECAPI_AVEncVideoMeanAbsoluteDifference: VT_UI4 0

CODECAPI_AVEncVideoMaxQP: VT_UI4 51, minimal VT_UI4 0, maximal VT_UI4 51, step VT_UI4 1

CODECAPI_AVEncVideoROIEnabled: VT_UI4 0

CODECAPI_AVEncVideoTemporalLayerCount: minimal VT_UI4 1, maximal VT_UI4 3, step VT_UI4 1
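Some of the large decimal values in the dumps above are easier to read in hexadecimal. A short Python sketch follows, assuming that CODECAPI_AVEncVideoEncodeFrameTypeQP packs three 16-bit QP values (I, P and B frames, from the lowest bits up) as I understand the Microsoft documentation; `unpack_frame_type_qp` is a hypothetical helper.

```python
def unpack_frame_type_qp(value):
    """Split a packed frame type QP value into 16-bit I/P/B fields."""
    return {
        "I": value & 0xFFFF,
        "P": (value >> 16) & 0xFFFF,
        "B": (value >> 32) & 0xFFFF,
    }


# Default and maximal values reported by the Intel encoder above
print(unpack_frame_type_qp(111670853658))
print(unpack_frame_type_qp(219046674483))

# "Current" buffer size and mean bitrate reported by the Intel encoder
print(hex(3131961357))
```

Under this assumption the default comes out as QP 26 for every frame type and the maximum as QP 51. The value 3131961357 is 0xbaadf00d, a pattern typically seen in uninitialized heap memory on Windows, so those two "current" values are likely not real settings.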

An important property of a hardware encoder is that even though it does consume some CPU time, most of the complexity is offloaded to video hardware. In all single stream test runs, the eight-core CPU was loaded not more than 30%, including the time required to synthesize the image using WIC and Direct2D and convert it to YUV format using the CPU. That is, offloading video encoding to GPU is a convenient way to free CPU for real time video processing applications.

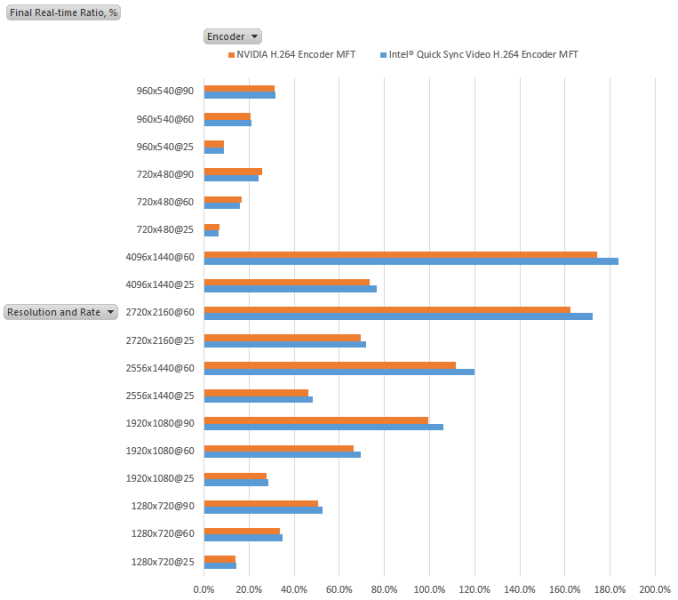

I was mostly interested in how well the encoders can process real time data, esp. when they are applied to record lengthy sessions. Both encoders appear to be fast enough to crack 1920×1080 HD video at frame rates of 60 and higher. The test did encoding at the highest rate possible, and the 100% mark on the charts corresponds to the situation where it took one second to synthesize and encode one second of video, no matter what the effective CPU/GPU load is. That is, values less than 100% indicate the ability to encode video content in real time right away.
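The percentage on the charts is a plain ratio of elapsed time to the duration of produced video. A tiny Python sketch of the arithmetic is below; the function names are hypothetical and the numbers in the usage note are made up for illustration, they are not measurements from the charts.

```python
def realtime_percentage(elapsed_seconds, video_seconds):
    """100% means one second of wall time per one second of video."""
    return 100.0 * elapsed_seconds / video_seconds


def is_realtime_capable(elapsed_seconds, video_seconds):
    """True if the content was produced at least as fast as it plays."""
    return realtime_percentage(elapsed_seconds, video_seconds) <= 100.0
```

For example, producing 60 seconds of video in 45 seconds gives `realtime_percentage(45, 60)` of 75%, that is, there is headroom for real time encoding; 90 seconds for the same footage gives 150%, and the encoder would fall behind a live source.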

Basically, the numbers show that both encoders are fast enough to reliably encode 1080p60 stream.

Looking at it from another standpoint of being able to process two or more H.264 encoding sessions at once, the encoder from NVIDIA has an important limitation of two sessions per system (supposedly related thread – for this or another reason test run with three streams fails).

Both encoders are hardly suitable for reliable encoding of two 1080p60 streams simultaneously (or perhaps some fine tuning might make things faster by choosing an appropriate encoding mode). However, both look fine for encoding a single stream of 1080p or lower resolution. Clearly, Intel's encoder can be used to encode multiple low resolution streams in parallel, or to mix real time encoding with background encoding (provided that background encoding is throttled to let the real time stream run fast enough). If real-time encoding is not necessary, both encoders can do the job as well; with NVIDIA the application needs to make sure that only two sessions are running simultaneously, while Intel's encoder can be used in a more flexible way.

Also, NVIDIA's encoder is slightly faster; however, Intel's allows 3+ concurrently encoded streams and also accepts RGB input directly without conversion to YUV.
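On the application side, the two session limit can be respected with a counting semaphore around the encoding sessions. Here is a minimal Python sketch of the idea; `SessionLimiter` is a hypothetical class, and the `encode` callable stands for whatever actually runs an encoding session.

```python
import threading


class SessionLimiter:
    """Allows at most max_sessions encoding sessions at a time."""

    def __init__(self, max_sessions=2):
        self._semaphore = threading.BoundedSemaphore(max_sessions)

    def run(self, encode, *arguments):
        # Blocks until a session slot is free, releases it when done
        with self._semaphore:
            return encode(*arguments)
```

Worker threads call `limiter.run(encode, job)` instead of `encode(job)`; extra jobs simply wait for a free slot rather than fail on the third session.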

There is also Intel® Hardware H265 Encoder MFT available for H.265 encoding, but this is going to be another story some time later.