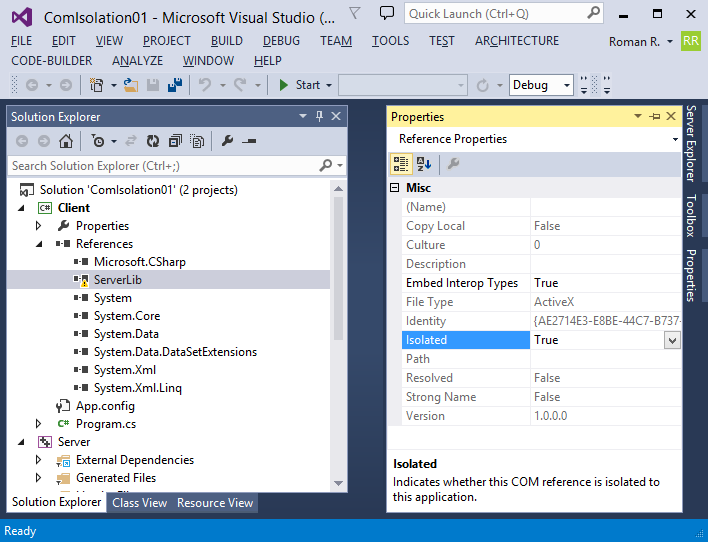

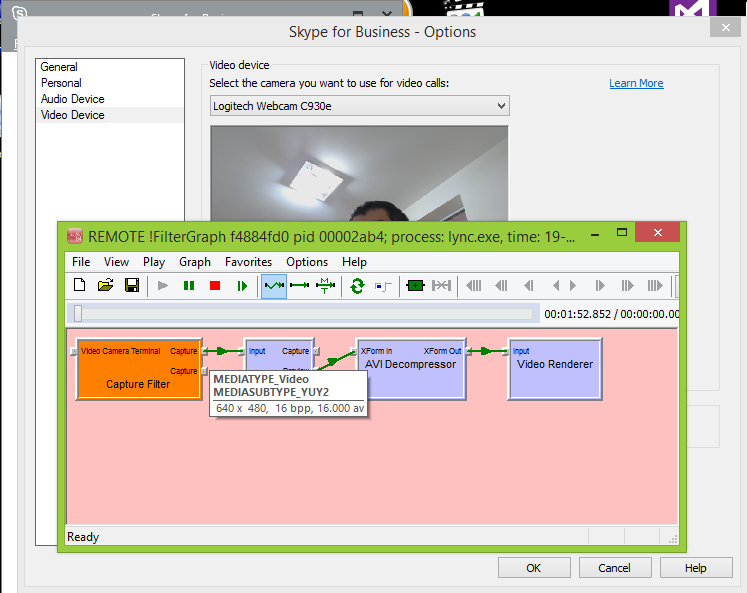

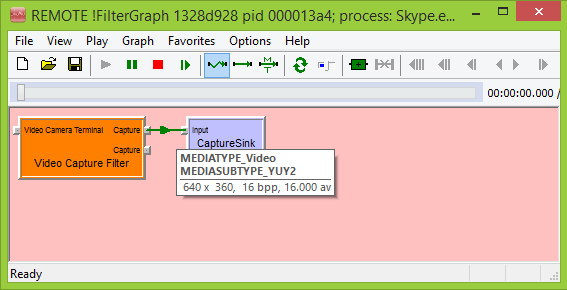

Visual Studio offers COM reference isolation: the application uses a COM dependency in the usual way, yet the dependency does not need to be registered. Even if another copy of the COM server is registered system-wide or per-user, the application still prefers its local copy.

The advantage is obvious: no more COM registration hell. The application can be distributed with a lower risk of conflicts with other installed software, and without the risk of affecting other applications by registering an unwanted piece of software. It also becomes possible to use a COM dependency without the elevated privileges that COM registration requires.

The feature relies on registration-free (reg-free) COM and is not new. Articles on the internet about it date back to 2007 and earlier (e.g. Isolated COM), and reg-free COM itself existed even before that. The feature is cool: it offers one-click access to an incredibly powerful option with complicated technology underneath.

Problem 1: It is fall 2015, and Visual Studio 2013 still does not have this option (as complicated as Enabled/Disabled) working right.

Once enabled, the option has the following effect on the project:

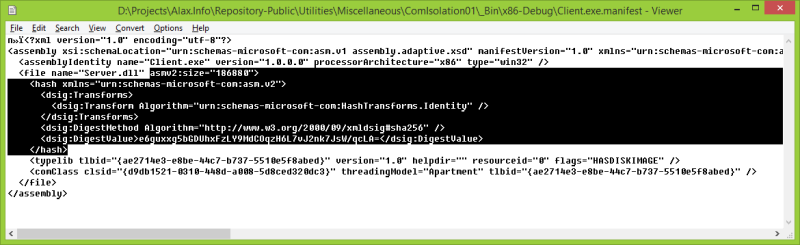

- the manifest file is detached from the binary and written to an external file (Client.exe + Client.exe.manifest, as opposed to Client.exe with the manifest embedded as a resource)

- the manifest receives assembly/file elements that establish a registration-free link to the COM dependency; the content of these elements repeats the registration data that the COM dependency normally writes into the registry system-wide

- the compiler uses the RegOverridePredefKey API and friends to inspect the COM dependency’s registration keys and update the manifest file (see the previous item) accordingly
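To make the second item more concrete, here is a rough sketch of what assembly/file elements for a reg-free COM link can look like in Client.exe.manifest. It is hand-written for illustration, not the exact output of Visual Studio: the type library ID is the one from the build log in this post, while the CLSID, ProgID, and threading model are hypothetical placeholders, and the size and hash data that Visual Studio attaches to the file element are left out.

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<assembly xmlns="urn:schemas-microsoft-com:asm.v1" manifestVersion="1.0">
  <assemblyIdentity type="win32" name="Client.exe" version="1.0.0.0" />
  <!-- The file element names the local copy of the COM server, resolved next to Client.exe -->
  <file name="Server.dll">
    <!-- Mirrors the type library registration normally kept under HKEY_CLASSES_ROOT\TypeLib -->
    <typelib tlbid="{ae2714e3-e8be-44c7-b737-5510e5f8abed}" version="1.0" helpdir="" />
    <!-- Mirrors the HKEY_CLASSES_ROOT\CLSID entry; clsid, progid and threadingModel
         below are hypothetical placeholders, not values from the sample project -->
    <comClass clsid="{00000000-0000-0000-0000-000000000000}"
              progid="Server.Sample"
              threadingModel="Apartment"
              tlbid="{ae2714e3-e8be-44c7-b737-5510e5f8abed}" />
  </file>
</assembly>
```

With a manifest along these lines next to the executable, activation of the listed class is resolved against the local Server.dll rather than against the registry.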

Apparently the COM server has to be registered at compile time so that the compiler can convert the registration into manifest content. For whatever reason, Visual Studio 2013 looks for a 32-bit COM server when it is doing a 64-bit build. That is, building the x64 configuration with the x64 COM server registered (the one that is actually going to be used) fails if you don’t have a matching Win32 COM server registered as well. Bummer.

This simple solution, ComIsolation01 (Trac, SVN), has two projects: a C++ COM server with 32-bit and 64-bit configurations, and a C# client that consumes it. A build of the Debug/Release x64 configuration successfully builds Server.dll, registers it, then attempts to build Client.exe and fails:

2>C:\Program Files (x86)\MSBuild\12.0\bin\Microsoft.Common.CurrentVersion.targets(2234,5): warning MSB3284: Cannot get the file path for type library "ae2714e3-e8be-44c7-b737-5510e5f8abed" version 1.0. Library not registered. (Exception from HRESULT: 0x8002801D (TYPE_E_LIBNOTREGISTERED))

2>D:\Projects\Alax.Info\Repository-Public\Utilities\Miscellaneous\ComIsolation01\Client\Program.cs(14,13,14,22): error CS0246: The type or namespace name 'ServerLib' could not be found (are you missing a using directive or an assembly reference?)

2>D:\Projects\Alax.Info\Repository-Public\Utilities\Miscellaneous\ComIsolation01\Client\Program.cs(14,51,14,60): error CS0246: The type or namespace name 'ServerLib' could not be found (are you missing a using directive or an assembly reference?)

TYPE_E_LIBNOTREGISTERED, really? Yes, because the build looks for a 32-bit type library and only the 64-bit one is registered. Build the Win32 configuration once, and x64 builds are fixed. In all other respects, the x64 build of Server.dll is picked up correctly.

Problem 2: Inflexibility. The only form of COM reference isolation offered is a link that specifies the size and hash of the dependency.

Why on earth? Okay, it might be good for some people, perhaps. The only scenario I ever want to use is a link without checks for whether the dependency is exactly as it was at build time. It is already isolated, and the isolated file will be picked up. I would like to retain the option to patch it quickly by simply dropping a new file in place, without the annoying need to patch the manifest accordingly. I don’t have an option like this.

Another post will soon show a solution to this problem, as well as an easy way to apply isolation to C++ clients.