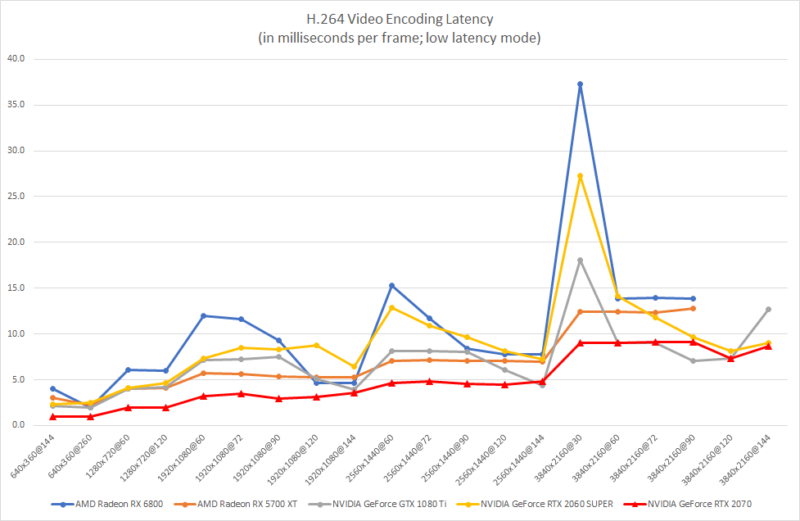

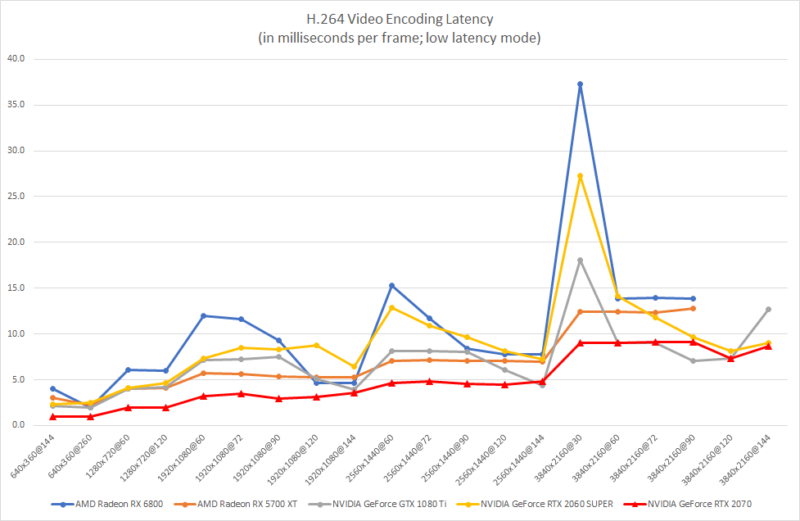

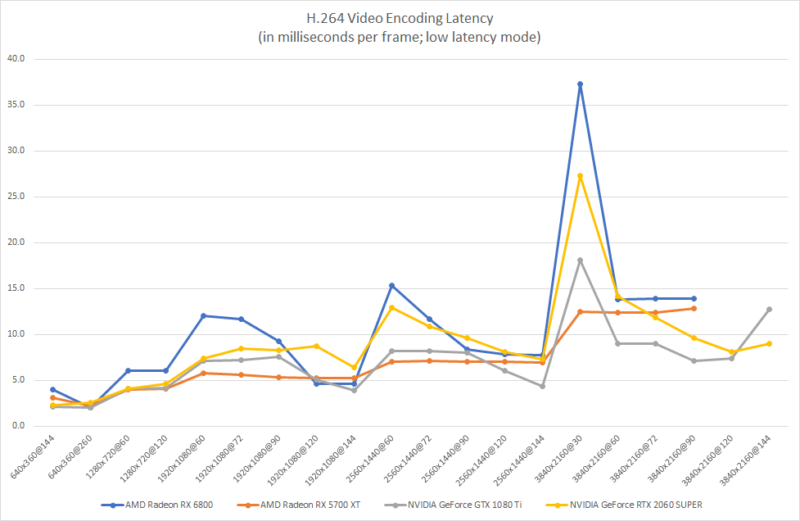

Following the previous post on AMD hardware video encoders, this shows how much better NVIDIA hardware is in comparison.

And NVIDIA already sells a successor hardware series!

// Software by Roman Ryltsov

AMD does not seem to be making any progress in improving the video encoding ASICs on its video cards. The new products look pretty depressing.

The AMD Radeon RX 5700 XT was a small step forward. The new series looks about the same or even slightly slower, and it is quite clear that the existing, cheaper NVIDIA offering beats the hell out of the new AMD gear.

Not to mention that NVIDIA cards can handle larger resolutions, whereas AMD's limit is 3840×2160@90.

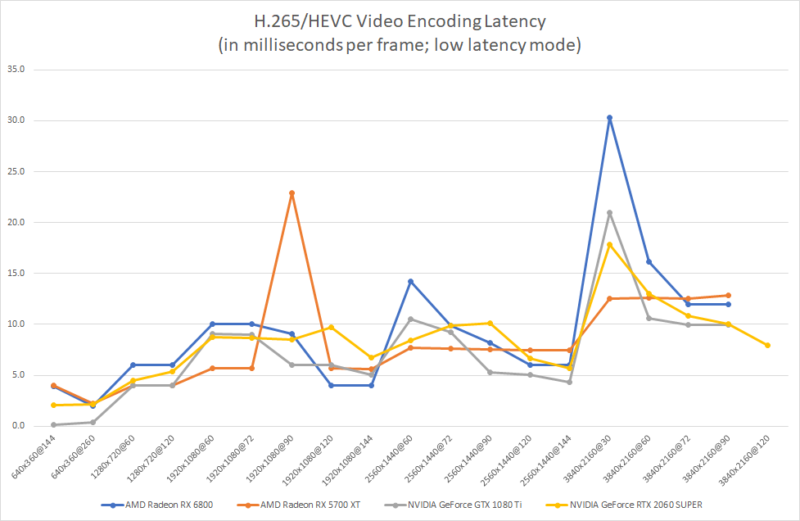

H.265/HEVC looks pretty similar:

```cpp
interface DECLSPEC_UUID("ac6b7889-0740-4d51-8619-905994a55cc6") DECLSPEC_NOVTABLE
IRtwqAsyncResult : public IUnknown
{
    STDMETHOD(GetState)( _Out_ IUnknown** ppunkState);
    STDMETHOD(GetStatus)();
    STDMETHOD(SetStatus)( HRESULT hrStatus);
    STDMETHOD(GetObject)( _Out_ IUnknown ** ppObject);
    STDMETHOD_(IUnknown *, GetStateNoAddRef)();
};

interface DECLSPEC_UUID("a27003cf-2354-4f2a-8d6a-ab7cff15437e") DECLSPEC_NOVTABLE
IRtwqAsyncCallback : public IUnknown
{
    STDMETHOD(GetParameters)( _Out_ DWORD* pdwFlags, _Out_ DWORD* pdwQueue );
    STDMETHOD(Invoke)( _In_ IRtwqAsyncResult* pAsyncResult );
};
```

The interface methods lack pure specifiers (= 0, which hand-written SDK headers usually spell as PURE). This might be OK for some development, but once you try to derive your handler class from public winrt::implements<AsyncCallback, IRtwqAsyncCallback>, you are in trouble!

```text
1>Foo.obj : error LNK2001: unresolved external symbol "public: virtual long __cdecl IRtwqAsyncCallback::GetParameters(unsigned long *,unsigned long *)" (?GetParameters@IRtwqAsyncCallback@@UEAAJPEAK0@Z)
1>Foo.obj : error LNK2001: unresolved external symbol "public: virtual long __cdecl IRtwqAsyncCallback::Invoke(struct IRtwqAsyncResult *)" (?Invoke@IRtwqAsyncCallback@@UEAAJPEAUIRtwqAsyncResult@@@Z)
```

The problem exists in the current Windows 10 SDK and has been there since at least 10.0.18362.0.
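The linker errors follow from a C++ rule: a virtual function that is declared but not pure must be defined somewhere, and because no diagnostic is required at compile time, the failure only surfaces at link time. Below is a portable model of the situation, not Windows code: AsyncCallbackBase, CountingCallback, the HRESULT/DWORD stand-ins and the kOk/kNotImplemented constants are hypothetical names. It sketches one possible workaround, supplying stub definitions for the base methods in a single translation unit. For the real header the analogue would presumably be defining IRtwqAsyncCallback::GetParameters and IRtwqAsyncCallback::Invoke with STDMETHODIMP in one .cpp file; that is an untested assumption here, not the author's fix.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical, portable stand-ins for the Windows types (not the SDK definitions).
using HRESULT = std::int32_t;
using DWORD = std::uint32_t;
constexpr HRESULT kOk = 0;                        // plays the role of S_OK
constexpr HRESULT kNotImplemented = -2147467263;  // E_NOTIMPL (0x80004001) as signed 32-bit

// Mimics the rtworkq.h declaration: virtual methods that are neither pure (= 0)
// nor defined. Any code that needs the base versions (for example, to build the
// base class vtable) leaves the linker looking for definitions that do not exist.
struct AsyncCallbackBase {
    virtual ~AsyncCallbackBase() = default;
    virtual HRESULT GetParameters(DWORD* flags, DWORD* queue);
    virtual HRESULT Invoke(void* result);
};

// Workaround sketch: out-of-line stub definitions, placed in exactly one
// translation unit, give the linker the symbols it asks for. Real handlers
// override both methods, so these stubs are not expected to run.
HRESULT AsyncCallbackBase::GetParameters(DWORD*, DWORD*) { return kNotImplemented; }
HRESULT AsyncCallbackBase::Invoke(void*) { return kNotImplemented; }

// A handler overriding both methods, as a real work queue callback would.
struct CountingCallback : AsyncCallbackBase {
    int invokeCount = 0;

    HRESULT GetParameters(DWORD* flags, DWORD* queue) override {
        assert(flags && queue);
        *flags = 0;  // no special flags
        *queue = 0;  // hypothetical default queue identifier
        return kOk;
    }

    HRESULT Invoke(void*) override {
        ++invokeCount;  // count completions
        return kOk;
    }
};
```

Normal virtual dispatch through an AsyncCallbackBase reference always lands in the overrides; only an explicitly qualified call such as cb.AsyncCallbackBase::Invoke(nullptr) reaches a stub and gets kNotImplemented back.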

Added a few more resolutions to the NvcEncode tool. Resolutions above 4K are also tried with H.264, but they are not expected to work, since the H.264 encoder is limited to 4096 pixels in width or height. So the new modes apply to H.265/HEVC. They work pretty well on NVIDIA GeForce RTX 2060 SUPER:

| Mode | Per-frame latency, ms |
|---|---|
| 640x360@144 | 2.10 |
| 640x360@260 | 2.16 |
| 1280x720@60 | 4.50 |
| 1280x720@120 | 5.38 |
| 1280x720@260 | 5.33 |
| 1920x1080@60 | 8.78 |
| 1920x1080@72 | 8.67 |
| 1920x1080@90 | 8.50 |
| 1920x1080@120 | 9.69 |
| 1920x1080@144 | 6.74 |
| 1920x1080@260 | 4.20 |
| 2560x1440@60 | 8.45 |
| 2560x1440@72 | 9.89 |
| 2560x1440@90 | 10.10 |
| 2560x1440@120 | 6.62 |
| 2560x1440@144 | 5.73 |
| 3840x2160@30 | 17.88 |
| 3840x2160@60 | 13.02 |
| 3840x2160@72 | 10.86 |
| 3840x2160@90 | 10.07 |
| 3840x2160@120 | 7.98 |
| 3840x2160@144 | 6.57 |
| 5120x2880@30 | 27.78 |
| 5120x2880@60 | 13.85 |
| 7680x4320@30 | 27.22 |
| 7680x4320@60 | 26.47 |

One interesting thing, too visible and consistent to be an occasional fluctuation, is that per-frame latency is lower for higher-rate feeds. The most recent run has a great example of this effect:

| Mode | Per-frame latency, ms |
|---|---|
| 3840x2160@30 | 17.88 |
| 3840x2160@60 | 13.02 |
| 3840x2160@72 | 10.86 |
| 3840x2160@90 | 10.07 |
| 3840x2160@120 | 7.98 |
| 3840x2160@144 | 6.57 |

I only have an educated guess, and the driver developers are likely to have a good explanation. This is probably something NVIDIA could improve for those who want the absolute lowest encoding latency.
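To put the latency numbers in perspective, each one can be compared with the frame interval of its feed, 1000 / fps milliseconds. A minimal sketch: the Measurement type and the function names are hypothetical, and the sample rows are copied from the H.265/HEVC table above. It treats latency and frame interval as directly comparable and ignores any pipelining inside the encoder, so a row that does not fit is not proof that the encoder cannot keep up with the rate.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One measured mode (hypothetical type; values come from the table above).
struct Measurement {
    std::string mode;  // e.g. "3840x2160@60"
    int fps;           // frame rate of the feed
    double latencyMs;  // measured per-frame encoding latency
};

// Time available per frame at a given rate: 1000 ms divided by fps.
double FrameBudgetMs(int fps) {
    assert(fps > 0);
    return 1000.0 / fps;
}

// Fraction of the frame interval consumed by encoding one frame.
double BudgetShare(const Measurement& m) {
    return m.latencyMs / FrameBudgetMs(m.fps);
}

// True when a single frame is encoded within one frame interval.
bool FitsInFrameInterval(const Measurement& m) {
    return m.latencyMs <= FrameBudgetMs(m.fps);
}

// A few rows from the H.265/HEVC measurements.
const std::vector<Measurement> kSamples = {
    {"3840x2160@30", 30, 17.88},
    {"3840x2160@144", 144, 6.57},
    {"1920x1080@260", 260, 4.20},
    {"7680x4320@60", 60, 26.47},
};
```

With these rows, 3840x2160@30 uses about 54% of its 33.3 ms interval while 3840x2160@144 uses about 95% of its 6.94 ms interval: absolute latency drops at higher rates, yet the share of the frame interval it consumes grows. 1920x1080@260 and 7680x4320@60 exceed their intervals.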

Binaries:

More unusual stuff: mixing a Media Foundation pipeline (specifically, a custom media source) with a GStreamer pipeline. It appears that, for all the bulkiness of the GStreamer runtime, the framework remains open to integration and flexible enough to mix pre-built and custom components.

The proof-of-concept push source plugin, built on top of a Media Foundation media session and media source, is reasonably small and fits into the pipeline in a pretty straightforward way:

```cpp
...

auto const Registry = gst_registry_get();
// Load selected pre-built plugins explicitly from a local GStreamer installation
std::string PluginDirectory = "D:\\gstreamer\\1.18\\1.0\\msvc_x86_64\\lib\\gstreamer-1.0";
for(auto&& FileName: { "gstopenh264.dll", "gstopengl.dll" })
{
    CHAR Path[MAX_PATH];
    PathCombineA(Path, PluginDirectory.c_str(), FileName);
    G::Error Error;
    GstPlugin* Plugin = gst_plugin_load_file(Path, &Error);
    assert(!Error);
    assert(Plugin);
    auto const Result = gst_registry_add_plugin(Registry, Plugin);
    assert(Result);
}
// Register the custom Media Foundation based source linked into the executable
// (presumably paired with GST_PLUGIN_STATIC_DECLARE(customsrc) in the elided part)
GST_PLUGIN_STATIC_REGISTER(customsrc);

#pragma endregion

{
    G::Error Error;
    // customsrc pushes compressed video, openh264dec decodes it, glimagesink presents it
    Gst::Element const Pipeline { gst_parse_launch("customsrc ! openh264dec ! glimagesink", &Error) };
    assert(!Error);
    gst_element_set_state(Pipeline.get(), GST_STATE_PLAYING);

...
```

As a codebase and plugin development environment, GStreamer is closer to DirectShow than to Media Foundation: there is a hierarchy of base classes for reuse, implemented, however, in C (not even C++), with a fair amount of chaos inside as a result.

Still, GStreamer looks cool overall and has accumulated a bunch of useful plugins over the years. The long list includes multiple integrations and implementations of MPEG-related things of all sorts, and RTP-related pieces in particular.

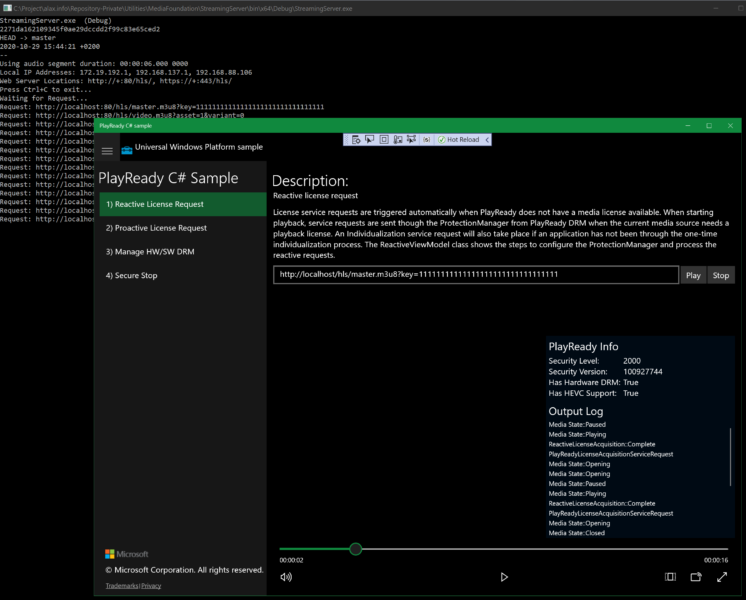

Adding another crazy thing to internal tooling uncovered a new, interesting bug. An application now creates an audiovisual HLS (HTTP Live Streaming, the adaptive streaming protocol developed by Apple) stream on the fly, with Microsoft PlayReady DRM encryption attached.

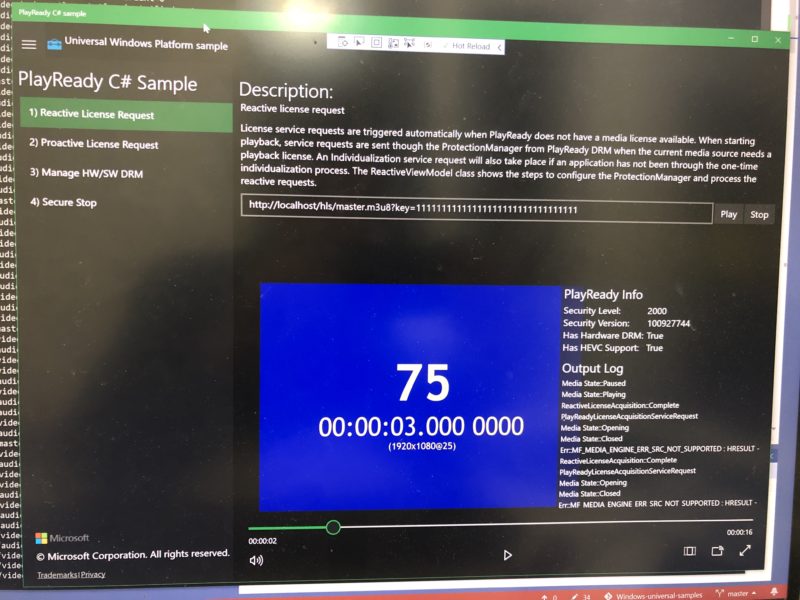

The UWP samples include an application for playback: the PlayReady sample. The playback looks like this:

Oops, no video! However, this is by design: DRM-enabled video is protected on multiple layers, and in the end the image cannot be captured as a screenshot because the video is automatically removed from the captured view.

The physical picture of the application on the monitor is:

Depending on protection level, there might be HDCP enforcement as well.

Now the bug: protected video running in a UWP MediaElement cannot be moved to another monitor (even though both monitors belong to the same video adapter):

It cannot even be restarted on another monitor! Generally speaking, this is not necessarily a bug in the media player element; it might as well be DXGI, for example, or even the NVIDIA driver. Even though NVIDIA software reports that both monitors can receive an HDCP-enabled signal, something is missing. Interestingly, the HDCP status can be queried via the standard API for one of the monitors, but not for the other.

PlayReady DRM itself is not a technology that comes with detailed documentation, and its support in Windows is seriously limited in both information and integration support. There is just the one sample mentioned above, and much of the related information is simply classified. There is not much for debugging either, because Microsoft intentionally limited PlayReady support to a stock implementation that is hard to use piecemeal and runs in an isolated protected process: Media Foundation Media Pipeline EXE (related keyword: PsProtectedSignerAuthenticode).

I have mentioned issues in AMD's and Intel's video encoding drivers, APIs and integration components. Now I switched my development box's video card to NVIDIA and immediately hit their glitch too.

The NVIDIA GeForce RTX 2060 SUPER offers a really fast video encoder, and consumer hardware from AMD and Intel is simply nowhere near it. 3840×2160@144 video can be encoded in under 10 ms per frame:

H.264:

| Mode | Per-frame latency, ms |
|---|---|
| 640x360@144 | 1.09 |
| 640x360@260 | 1.02 |
| 1280x720@60 | 2.00 |
| 1280x720@120 | 2.00 |
| 1920x1080@60 | 3.26 |
| 1920x1080@72 | 3.30 |
| 1920x1080@90 | 3.26 |
| 1920x1080@120 | 3.29 |
| 1920x1080@144 | 3.77 |
| 2560x1440@60 | 5.23 |
| 2560x1440@72 | 5.22 |
| 2560x1440@90 | 5.33 |
| 2560x1440@120 | 5.71 |
| 2560x1440@144 | 5.75 |
| 3840x2160@30 | 11.00 |
| 3840x2160@60 | 11.33 |
| 3840x2160@72 | 11.32 |
| 3840x2160@90 | 9.41 |
| 3840x2160@120 | 7.62 |
| 3840x2160@144 | 8.54 |

HEVC:

| Mode | Per-frame latency, ms |
|---|---|
| 640x360@144 | 1.00 |
| 640x360@260 | 1.00 |
| 1280x720@60 | 2.05 |
| 1280x720@120 | 2.05 |
| 1920x1080@60 | 4.03 |
| 1920x1080@72 | 4.01 |
| 1920x1080@90 | 4.01 |
| 1920x1080@120 | 4.63 |
| 1920x1080@144 | 4.67 |
| 2560x1440@60 | 4.00 |
| 2560x1440@72 | 4.00 |
| 2560x1440@90 | 4.00 |
| 2560x1440@120 | 4.10 |
| 2560x1440@144 | 4.18 |
| 3840x2160@30 | 8.00 |
| 3840x2160@60 | 8.33 |
| 3840x2160@72 | 8.43 |
| 3840x2160@90 | 8.42 |
| 3840x2160@120 | 7.88 |
| 3840x2160@144 | 6.89 |

However, this is their hardware and API, integrated with Media Foundation through a custom wrapper.

NVIDIA's Media Foundation encoder transform (MFT) shipped with the video driver fails to do even a simple thing correctly. Encoding a texture using the NVIDIA MFT:

It looks like the color space conversion taking place inside the transform is failing…

The NVIDIA HEVC Encoder MFT handles the same input (textures) correctly.
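Video encoders generally operate on Y'CbCr data, so an RGB texture has to be converted somewhere along the way, and in the failing case that step apparently happens inside the transform. For reference, here is a per-pixel sketch of such a conversion, assuming BT.709 coefficients and 8-bit limited (video) range. Which matrix and range NVIDIA's MFT actually uses is not known here; the function and type names are hypothetical, and a real converter would also subsample chroma for a format like NV12.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// One 8-bit limited-range Y'CbCr sample (hypothetical type).
struct YCbCr { int y, cb, cr; };

// Converts one 8-bit RGB pixel to limited-range Y'CbCr (Y' 16-235, Cb/Cr 16-240)
// using BT.709 coefficients. Assumption: BT.709 and limited range are
// illustrative choices, not what any particular MFT is known to do.
YCbCr RgbToYCbCr709(std::uint8_t r8, std::uint8_t g8, std::uint8_t b8) {
    constexpr double kr = 0.2126, kb = 0.0722, kg = 1.0 - kr - kb;
    const double r = r8 / 255.0, g = g8 / 255.0, b = b8 / 255.0;
    const double y = kr * r + kg * g + kb * b;        // luma in 0..1
    const double pb = (b - y) / (2.0 * (1.0 - kb));   // blue difference in -0.5..0.5
    const double pr = (r - y) / (2.0 * (1.0 - kr));   // red difference in -0.5..0.5
    // Round to the nearest integer code and clamp to the legal range.
    auto quantize = [](double v, int lo, int hi) {
        return std::clamp(static_cast<int>(std::lround(v)), lo, hi);
    };
    assert(y >= -1e-9 && y <= 1.0 + 1e-9);
    return {
        quantize(16.0 + 219.0 * y, 16, 235),
        quantize(128.0 + 224.0 * pb, 16, 240),
        quantize(128.0 + 224.0 * pr, 16, 240),
    };
}
```

White maps to (235, 128, 128) and black to (16, 128, 128); if a transform picks the wrong matrix or range, colors shift or contrast collapses, which is one way such a conversion can visibly go wrong.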