2025-03-25 Update: https://iccapturecodecs.alax.info/ hosts a productized version of the codec, with a demonstration/free trial option.

The Imaging Source's truly advanced industrial cameras come with image acquisition software: the End User Software is the free IC Capture application, which has lately run into a few problems that need resolving.

First of all, “the older” application is effectively discontinued. The Windows application version 2.5 was available on the website as recently as a few weeks ago, but with the release announcement of IC Capture version 4 the older application was removed completely.

Another application from The Imaging Source, IC Measure, has been renamed after its predecessor IC Capture, with a version increment… Now IC Capture 2.5 is almost gone. OK, almost: there are still some traces at https://www-st.theimagingsource.com/en-us/support/download/iccapture-2.5.1557.4007/

The question is this: the cameras are good enough to capture video with 12-bit color depth (for example, the 33U series). Is it possible to record the original video stream in a popular video format for use in other applications?

Judging by its “AVI Files and Codecs Support” section, the new application no longer seems to offer AVI support; instead it records MP4 files with H.264 or H.265 encoding. You could give it a try and record 4000×3000@20 with 12-bit wide dynamic range into one of these formats, hopefully without dropping frames.
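To put "hopefully without dropping frames" into perspective, a quick back-of-the-envelope estimate of the uncompressed data rate for a 4000×3000@20 stream helps. The sketch below assumes that RGB64 means 64 bits (8 bytes) per pixel, i.e. four 16-bit channels; the "packed 12-bit" row is a hypothetical tight packing of three 12-bit channels, included only for comparison.

```python
# Rough uncompressed data-rate estimate for a 4000x3000 @ 20 fps stream.
# Assumption: RGB64 = 64 bits (8 bytes) per pixel, four 16-bit channels.
# The "packed 12-bit" entry is a hypothetical layout, shown for comparison only.

WIDTH, HEIGHT, FPS = 4000, 3000, 20

FORMATS_BITS_PER_PIXEL = {
    "RGB24 (8 bits per channel)": 24,
    "RGB 12-bit packed (hypothetical)": 36,
    "RGB64 (16-bit containers)": 64,
}


def data_rate_bytes_per_second(bits_per_pixel: int) -> float:
    """Return the uncompressed stream rate in bytes per second."""
    frame_bytes = WIDTH * HEIGHT * bits_per_pixel / 8
    return frame_bytes * FPS


if __name__ == "__main__":
    for name, bpp in FORMATS_BITS_PER_PIXEL.items():
        rate = data_rate_bytes_per_second(bpp)
        # Print in MB/s (10^6 bytes) and Gbit/s (10^9 bits).
        print(f"{name:35s} {rate / 1e6:8.0f} MB/s  {rate * 8 / 1e9:6.2f} Gbit/s")
```

Under these assumptions the RGB64 stream is about 1.92 GB/s before compression, roughly 2.7 times the 8-bit RGB24 rate, which is why both the encoder and the CPU behind it have to keep up in real time.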

The previous IC Capture 2.5 application for Windows had better integration options and did offer AVI recording, interoperable with third-party software. Finding a suitable codec was still quite a challenge, yet it is now possible with a specialized GoPro CineForm codec designed to work with IC Capture 2.5:

- the codec directly accepts The Imaging Source driver’s RGB64 format (the original 12-bit color depth from the camera, without reduction to 8 bits) with the WDR (wide dynamic range) option turned on for video

- the codec retains 12-bit precision in the produced output (even though the output bitrate is pretty high as a result!)

- the codec does its best not to lose input frames and makes the most of the CPU through parallel processing, in order to preserve as much of the originally acquired data as possible; as a rough estimate, a 12th-generation or newer Intel i7 CPU can handle compressing a WDR 4000×3000@20 video stream, so that all the data is reliably recorded and available for offline post-processing
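The benefit of keeping 12 bits instead of reducing to 8 can be illustrated with a small sketch. It assumes, hypothetically, that each 12-bit sample sits MSB-aligned in a 16-bit RGB64 channel (low 4 bits zero); the actual driver layout is not stated here. The helper names are made up for illustration, and the 8-bit path is a simple "keep the top 8 bits" reduction, not the codec's own processing.

```python
# Sketch: why keeping 12 bits matters when the driver delivers 16-bit channels.
# Assumption (hypothetical, not confirmed for the driver): each 12-bit sample is
# stored MSB-aligned in a 16-bit channel, i.e. its low 4 bits are zero.

def to_container16(sample12: int) -> int:
    """Place a 12-bit sample (0..4095) into a 16-bit channel, MSB-aligned."""
    if not 0 <= sample12 < 4096:
        raise ValueError("expected a 12-bit sample")
    return sample12 << 4


def reduce_to_8bit(value16: int) -> int:
    """Simple 8-bit reduction: keep only the top 8 bits of the channel."""
    return value16 >> 8


def keep_12bit(value16: int) -> int:
    """Precision-preserving path: keep the top 12 bits of the channel."""
    return value16 >> 4


if __name__ == "__main__":
    # Sixteen neighbouring 12-bit levels in a dark (shadow) region.
    shadows = range(16, 32)
    as_8bit = {reduce_to_8bit(to_container16(s)) for s in shadows}
    as_12bit = {keep_12bit(to_container16(s)) for s in shadows}
    print(f"distinct levels after 8-bit reduction: {len(as_8bit)}")
    print(f"distinct levels with 12 bits kept:     {len(as_12bit)}")
```

In this toy case, sixteen distinct shadow levels collapse into a single 8-bit value, while the 12-bit path keeps all of them; that shadow and highlight detail is exactly what WDR footage needs for later grading.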

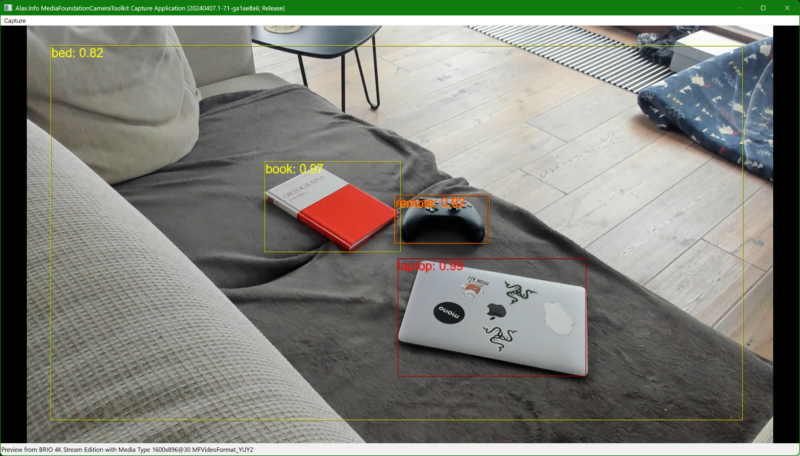

Essentially, the codec gives the discontinued IC Capture 2.5 a fine tune-up, extending its recording abilities beyond those of the current IC Capture 4 software for Windows… The produced files open nicely in software such as DaVinci Resolve or the good old Media Player Classic Home Cinema.

The pre-release of the codec is available for download below. Requirements:

- Windows 10/11: the minimum version has not been identified; the software should be compatible with the last few versions of Windows

- 64-bit Windows and the 64-bit build of IC Capture 2.5 (x64): there is no 32-bit build, since it would make little sense; IC Capture 2.5 installs both Win32 and x64 variants of the application, so please use the x64 one, where the codec selection UI will show the additional codec option

- an unregistered codec overlays “DEMO CODEC” text on the video; the overlay goes away once a valid license token is added for the codec (to be described later, or please get in touch for a quote)

- the codec is designed to work only with IC Capture 2.5 x64 software

Downloads are at: https://iccapturecodecs.alax.info/