I am reposting a Q+A from elsewhere (originally in Russian) on injecting externally obtained raw audio data into a Windows API media pipeline.

Q: … what is the simplest way to turn chunks of PCM bytes into a compressed format, for example WMA, using only what the Windows SDK provides? […] As I understand it, a byte stream cannot be converted without writing your own DirectShow (DS) filter (source or capture?). As for Media Foundation (MF), I was hoping to find an example online, but for some reason all there is are good examples of recording loopback audio to a WAV file or converting WAV to WMA, and going through an intermediate file is very inefficient, all the more so because my next task is to stream this audio over the network in parallel with writing it to a file. Right now I am trying to figure out IMFTransform::ProcessInput, but it takes an IMFSample rather than bytes, and I have not yet found concrete examples of getting bytes into an IMFSample. I have the impression that both DS and MF, for what seems like a simple task, require creating COM objects and even registering them with the system. Is there really no simpler way?

A: There is no ready-made solution for pushing data into a DS or MF pipeline. Building the necessary glue yourself is quite a feasible task, which is presumably why Microsoft has not bothered to provide a stock solution that would not suit everyone anyway, for various reasons.

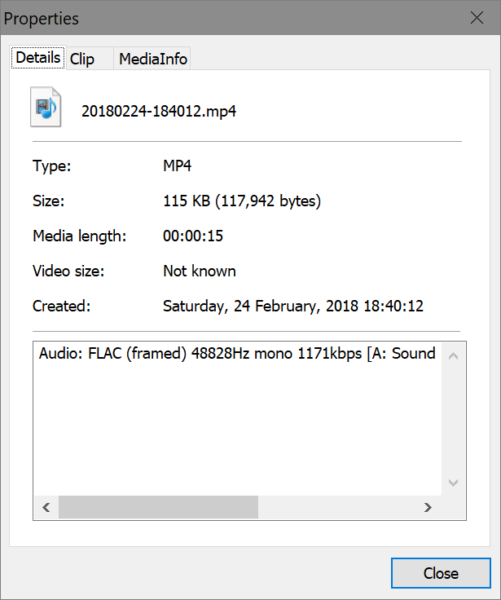

An audio stream is never just a stream of bytes: it also carries a format and timing. That is why components that work with bytes usually operate on multiplexed (container) formats such as .WAV. Since what you have is chunks of PCM data, this is indeed a job for either a custom DirectShow source filter or a custom Media Foundation media/stream source. Implementing one gives you the glue you need and, generally speaking, this is the simple way. In particular, it is much simpler than trying to go through a file.
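To make the "format and timing" point concrete, here is a minimal sketch of the bookkeeping a custom source has to do for each incoming PCM chunk. It is not taken from the original answer: `PcmFormat`, `PcmClock`, and `ChunkTiming` are hypothetical names, and the code assumes integer PCM with a whole number of bytes per sample. Both DirectShow and Media Foundation express time in 100 ns units, which is what the sketch produces.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical description of an uncompressed PCM stream (illustrative only).
struct PcmFormat {
    std::uint32_t sampleRate;     // frames per second, e.g. 44100
    std::uint16_t channels;       // e.g. 2 for stereo
    std::uint16_t bitsPerSample;  // e.g. 16
};

// DirectShow and Media Foundation both use 100 ns time units.
constexpr std::uint64_t kUnitsPerSecond = 10000000;

// Size in bytes of one frame (one sample for every channel).
inline std::uint32_t BytesPerFrame(const PcmFormat& fmt) {
    assert(fmt.channels > 0 && fmt.bitsPerSample > 0 && fmt.bitsPerSample % 8 == 0);
    return fmt.channels * (fmt.bitsPerSample / 8u);
}

// Converts a running frame count into a timestamp in 100 ns units.
inline std::int64_t FramesToTime(const PcmFormat& fmt, std::uint64_t frames) {
    assert(fmt.sampleRate > 0);
    return static_cast<std::int64_t>(frames * kUnitsPerSecond / fmt.sampleRate);
}

// Start time and duration of one chunk, both in 100 ns units.
struct ChunkTiming {
    std::int64_t start;
    std::int64_t duration;
};

// Tracks how many frames have been delivered so far and stamps each chunk.
class PcmClock {
public:
    explicit PcmClock(PcmFormat fmt) : fmt_(fmt) {}

    // Call once per chunk, in order. The chunk must hold whole frames.
    ChunkTiming Advance(std::size_t chunkBytes) {
        const std::uint32_t frameSize = BytesPerFrame(fmt_);
        assert(chunkBytes % frameSize == 0);
        const std::int64_t start = FramesToTime(fmt_, framesSoFar_);
        framesSoFar_ += chunkBytes / frameSize;
        // Deriving the end from the cumulative count avoids rounding drift.
        const std::int64_t end = FramesToTime(fmt_, framesSoFar_);
        return {start, end - start};
    }

private:
    PcmFormat fmt_;
    std::uint64_t framesSoFar_ = 0;
};
```

In a real source these values would presumably end up on the media sample that wraps the chunk (for example through `IMFSample::SetSampleTime` and `IMFSample::SetSampleDuration` in MF, or `IMediaSample::SetTime` in DS); that part is Windows-specific and left out of the sketch.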

Neither DS nor MF requires registration with the system. You can register, of course, but it is optional. Once you have implemented the class you need, you can use it directly when building the topology, without bringing it in through system registration.

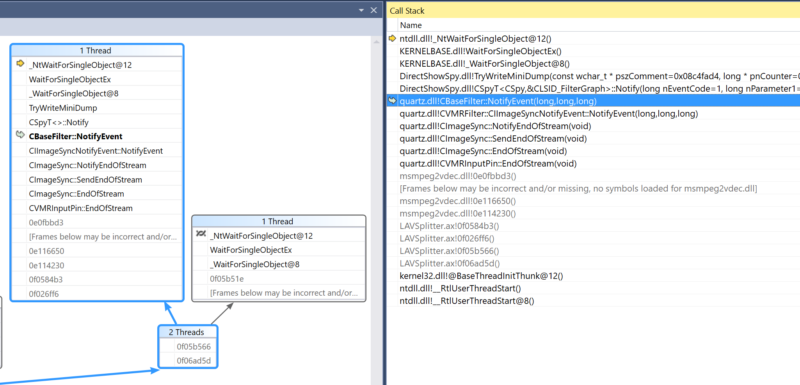

With DS, you need to write your own audio source filter. The hard part is that you will have to rely on a fairly old code base (the DirectShow base classes), and that, whatever one may say, the DirectShow API is at the end of its life cycle. Nevertheless, older SDKs include the Synth Filter Sample, the Ball Filter Sample for video, and others that show how to write a source filter and, frankly, they are quite compact. The filter you need will be fairly simple once you work out what is what. You can also find information on using a filter without registration, for example in "Using a DirectShow filter without registering it, via a private CoCreateInstance".

With MF, the situation is somewhat similar. You could, of course, build a .WAV-format stream in memory and feed it to the MF topology as a byte stream. The API is flexible enough to allow that, but here too I would recommend writing your own media source that generates a PCM data stream from the chunks you feed into it. Among the advantages of MF: it is the newer, current API, with broader coverage on modern platforms. It may also be possible to write the necessary code in C#, if there is a need for that. The bad news is that, structurally, such a COM class will definitely be more complex, and you will need to dig a little deeper into the API. Information and samples are scarce, and besides, MF itself hardly offers better and/or clearer capabilities in terms of standard codecs, sending data to a file or the network, or development tools. The closest SDK sample is probably the MPEG1Source Sample and, it seems to me, it is not easy to make sense of at first glance.
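For the in-memory .WAV alternative mentioned above (not the approach recommended here), the extra work is mostly prepending a header that carries the format. Below is a minimal sketch of the canonical 44-byte RIFF/WAVE header for integer PCM. The function name `MakeWavHeader` and its helpers are hypothetical, the total data size is assumed to be known up front, and handing the resulting bytes to MF as a byte stream is outside the sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Appends the low `bytes` bytes of `value` in little-endian order.
inline void PutLE(std::vector<std::uint8_t>& out, std::uint32_t value, int bytes) {
    for (int i = 0; i < bytes; ++i) {
        out.push_back(static_cast<std::uint8_t>((value >> (8 * i)) & 0xFF));
    }
}

// Appends a four-character chunk tag such as "RIFF".
inline void PutTag(std::vector<std::uint8_t>& out, const char (&tag)[5]) {
    out.insert(out.end(), tag, tag + 4);
}

// Builds a 44-byte WAV header for integer PCM (hypothetical helper).
// dataBytes is the size of the PCM payload that will follow the header.
std::vector<std::uint8_t> MakeWavHeader(std::uint32_t sampleRate,
                                        std::uint16_t channels,
                                        std::uint16_t bitsPerSample,
                                        std::uint32_t dataBytes) {
    assert(channels > 0 && bitsPerSample > 0 && bitsPerSample % 8 == 0);
    const std::uint32_t blockAlign = channels * (bitsPerSample / 8u);

    std::vector<std::uint8_t> h;
    h.reserve(44);
    PutTag(h, "RIFF");
    PutLE(h, 36 + dataBytes, 4);           // size of everything after this field
    PutTag(h, "WAVE");
    PutTag(h, "fmt ");
    PutLE(h, 16, 4);                       // size of the PCM format chunk
    PutLE(h, 1, 2);                        // format tag 1 = PCM
    PutLE(h, channels, 2);
    PutLE(h, sampleRate, 4);
    PutLE(h, sampleRate * blockAlign, 4);  // average bytes per second
    PutLE(h, blockAlign, 2);               // bytes per frame
    PutLE(h, bitsPerSample, 2);
    PutTag(h, "data");
    PutLE(h, dataBytes, 4);                // PCM payload size
    return h;
}
```

The need to know `dataBytes` in advance is one reason this route fits live, open-ended capture poorly, which is consistent with preferring a custom media source for chunked PCM.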

If you have no particular preference in terms of API, then for this task, and given the situation you describe, I would suggest DirectShow. However, if beyond the question at hand you have reasons, constraints, or grounds that require Media Foundation, then it may be preferable to develop the audio processing within that API as well. At the same time, as I wrote at the start, building a data source for either API is quite a feasible task, and the result will work reliably and efficiently.