Even though the AMD H.264 Video Encoder Media Foundation Transform (MFT), AKA AMDh264Encoder, is generally a fairly decent piece of software, it still has a few awkward bugs worth mentioning. This time I am going to show one of them: the video encoder transform fails to acquire synchronization on input textures.

The problem comes up when keyed mutex aware textures knock on the input door of the transform: the Media Foundation samples carry textures created with the D3D11_RESOURCE_MISC_SHARED_KEYEDMUTEX flag, which MSDN describes this way:

> […] You can retrieve a pointer to the IDXGIKeyedMutex interface from the resource by using IUnknown::QueryInterface. The IDXGIKeyedMutex interface implements the IDXGIKeyedMutex::AcquireSync and IDXGIKeyedMutex::ReleaseSync APIs to synchronize access to the surface. The device that creates the surface, and any other device that opens the surface by using OpenSharedResource, must call IDXGIKeyedMutex::AcquireSync before they issue any rendering commands to the surface. When those devices finish rendering, they must call IDXGIKeyedMutex::ReleaseSync. […]

A video encoder MFT is supposed to honor the flag and acquire synchronization before the video frame is taken for encoding. The AMD implementation fails to do so. This is a bug, a fairly important one, and it has been around for a while.

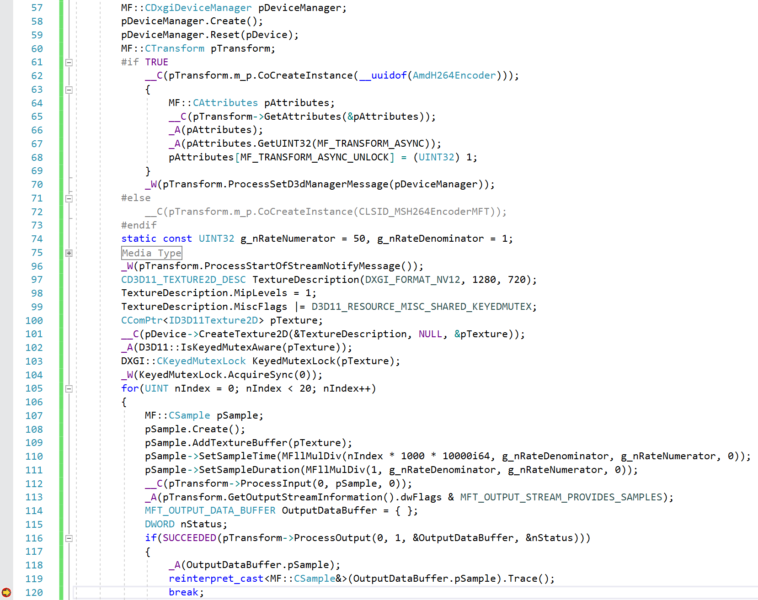

The following code snippet (see also the text at the bottom of the post) demonstrates the incorrect behavior of the transform.

Execution reaches the breakpoint and produces an H.264 sample even though the input texture fed into the transform was made inaccessible by the AcquireSync call in line 104.

By contrast, Microsoft’s H.264 Video Encoder implementation, AKA CLSID_MSH264EncoderMFT, behaves correctly and fails with DXGI_ERROR_INVALID_CALL (0x887A0001) in line 112.

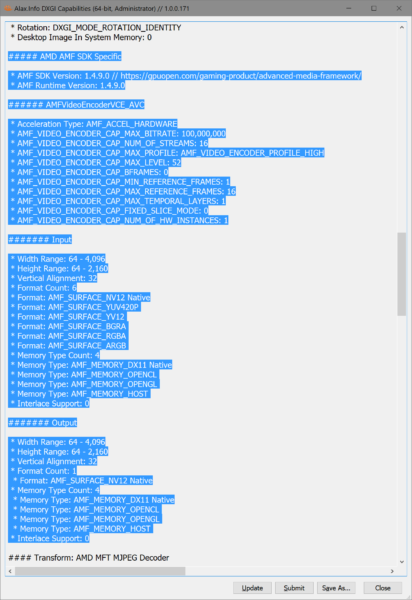
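To make the expected contract concrete, here is a toy, single-threaded C++ model of the keyed mutex rules quoted above, together with an encoder input step that either honors them or ignores them. Everything in it is hypothetical and for illustration only: KeyedMutexModel, SubmitFrame and the constants are not DXGI or Media Foundation APIs, the acquire is non-blocking (think AcquireSync with a zero timeout), and key 0 is assumed for both acquire and release. The model reports a contended acquire as WAIT_TIMEOUT; it does not attempt to reproduce the exact DXGI_ERROR_INVALID_CALL code the Microsoft encoder returns.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical result codes, using the numeric values of the real constants.
using HResult = std::uint32_t;
constexpr HResult kOk = 0x00000000;           // S_OK
constexpr HResult kWaitTimeout = 0x00000102;  // WAIT_TIMEOUT
constexpr HResult kInvalidCall = 0x887A0001;  // DXGI_ERROR_INVALID_CALL

// Toy model of a keyed mutex: NOT the IDXGIKeyedMutex interface.
class KeyedMutexModel {
public:
    // Succeeds only if nobody owns the mutex and the key matches the one
    // passed to the last ReleaseSync (the model starts unowned with key 0).
    HResult AcquireSync(std::uint64_t key) {
        if (owned_ || key != key_) return kWaitTimeout;  // no waiting in this model
        owned_ = true;
        return kOk;
    }

    // Releasing a mutex that is not owned is an invalid call.
    HResult ReleaseSync(std::uint64_t key) {
        if (!owned_) return kInvalidCall;
        owned_ = false;
        key_ = key;  // the next acquirer must use this key
        return kOk;
    }

private:
    bool owned_ = false;
    std::uint64_t key_ = 0;
};

// Hypothetical encoder input step. A compliant encoder takes the mutex before
// reading the texture and gives it back afterwards; the non-compliant variant
// reads the texture regardless of who owns it.
HResult SubmitFrame(KeyedMutexModel& texture, bool honorsKeyedMutex, bool& encoded) {
    encoded = false;
    if (honorsKeyedMutex) {
        const HResult hr = texture.AcquireSync(0);
        if (hr != kOk) return hr;  // texture is held elsewhere: refuse the frame
        encoded = true;            // ... read and encode the frame here ...
        return texture.ReleaseSync(0);
    }
    encoded = true;  // reads the texture without any synchronization
    return kOk;
}
```

With the application holding the texture after its own AcquireSync(0), the non-compliant path still "encodes" the frame and returns success, much like the AMD transform, while the compliant path refuses; once the application calls ReleaseSync(0), the compliant path goes through.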

While putting together the SSCCE above and writing this blog post, I hit another AMD MFT bug, which is perhaps less important but still reveals sloppiness in the internal implementation.

An attempt to send the MFT_MESSAGE_NOTIFY_START_OF_STREAM message in line 96 above before the input and output media types are set triggers a memory access violation:

```
'Application.exe' (Win32): Loaded 'C:\Windows\System32\DriverStore\FileRepository\c0334550.inf_amd64_cd83b792de8abee9\B334365\atiumd6a.dll'. Symbol loading disabled by Include/Exclude setting.
'Application.exe' (Win32): Loaded 'C:\Windows\System32\DriverStore\FileRepository\c0334550.inf_amd64_cd83b792de8abee9\B334365\atiumd6t.dll'. Symbol loading disabled by Include/Exclude setting.
'Application.exe' (Win32): Loaded 'C:\Windows\System32\DriverStore\FileRepository\c0334550.inf_amd64_cd83b792de8abee9\B334365\amduve64.dll'. Symbol loading disabled by Include/Exclude setting.
Exception thrown at 0x00007FF81FC0E24B (AMDh264Enc64.dll) in Application.exe: 0xC0000005: Access violation reading location 0x0000000000000000.
```
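A read at address zero suggests the transform dereferences state that only exists once media types are negotiated. Below is a minimal C++ sketch of the kind of guard one would expect instead. EncoderModel, MediaTypeModel and Message are hypothetical stand-ins, not the IMFTransform interface, and returning MF_E_TRANSFORM_TYPE_NOT_SET (0xC00D6D60) for this message is my assumption, borrowed from the error IMFTransform::ProcessInput documents for a missing input type.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>

// Hypothetical result codes, using the numeric values of the real constants.
using HResult = std::uint32_t;
constexpr HResult kOk = 0x00000000;          // S_OK
constexpr HResult kTypeNotSet = 0xC00D6D60;  // MF_E_TRANSFORM_TYPE_NOT_SET

// Hypothetical stand-in for a negotiated media type.
struct MediaTypeModel {
    std::uint32_t width = 0;
    std::uint32_t height = 0;
};

enum class Message { NotifyStartOfStream, NotifyEndOfStream };

// Toy encoder: media types are held by pointer, so they are null until set.
class EncoderModel {
public:
    void SetInputType(MediaTypeModel type) { input_ = std::make_unique<MediaTypeModel>(type); }
    void SetOutputType(MediaTypeModel type) { output_ = std::make_unique<MediaTypeModel>(type); }

    HResult ProcessMessage(Message message) {
        if (message == Message::NotifyStartOfStream) {
            // Guard: without both types there is nothing to configure, so
            // report an error instead of dereferencing a null pointer.
            if (!input_ || !output_) return kTypeNotSet;
            frameSize_ = input_->width * input_->height;
        }
        return kOk;
    }

    std::uint32_t FrameSize() const { return frameSize_; }

private:
    std::unique_ptr<MediaTypeModel> input_;
    std::unique_ptr<MediaTypeModel> output_;
    std::uint32_t frameSize_ = 0;
};
```

Sending the start-of-stream message to a fresh EncoderModel returns kTypeNotSet rather than crashing, and the same message succeeds once both types have been set.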